Streamflow decomposition with mgcv

In this notebook we will explore the use of Generalised Additive Models (GAMs) for timeseries data using the mgcv package. There are many great resources on GAMs (see the “Further reading” section for a start), the idea of this notebook is to really guide readers towards these more comprehensive works. To this end we will show the power of GAMs and some of the potential pitfalls (in particular when it comes to timeseries data) in an example that will hopefully resonate more for those with a background in water.

To make things easier we will be using synthetic data which will allow us to explore the GAM fitting in a controlled way. We will be using a synthetic streamflow dataset which we aim to disentangle into the various components which contribute the overall signal.

As a strand to follow through the analysis, we will set the aim of find the underlying time trend that is present beyond the influence that can be accounted for between the other climatic variables.

1 A short word on GAMs

GAMs are an extension on generalised linear models to incorporate nonlinear functions of the input variables. We will see a general equation below but the important parts we need to remember for our purposes are:

- we are trying to model streamflow which is our response/target variable (\(y\)) using some covariates (input variables) (\(x_m\))

- we do this by finding some functions which perform some linear or nonlinear transformation the covariates and add the resulting outputs together (our sum of \(f_m(x_m)\))

- these functions are called “smooths” in GAM world and are usually penalised regression splines - a flexible way to fit our nonlinear relationships

- we conveniently ignore the link function here which can be used to change of our additive model to the scale of the response variable if needed - we will go into this more below

- we assume that our model fits our observations with gaussian residuals of standard deviation \(\sigma\) - \(\mathcal{N}(0,\sigma^2)\)

- if you’re new to splines/smooths this will all make more sense as we proceed and start plotting them!

\[y=\alpha + \sum_{m}^{M}f_m\left(x_m\right) + \epsilon \qquad \epsilon \sim \mathcal{N}(0,\sigma^2)\]

I think that is probably enough to do what we need to do below, but again there are many many great resources on GAMs that you should check out. Some of these are listed in the “further reading” section.

2 Load covariates

First lets load the packages we will need and then the prepared data.

library(tidyverse) # Standard

library(mgcv) # Package for fitting GAMs

library(gratia) # Package for plotting GAMs

library(patchwork) # Package for arranging plots

source('functions/adj_gam_sim.R')

source('functions/plotting.R')Load processed synthetic streamflow data (created using 00_synthetic_timeseries_generation.Rmd which is available in the github repo).

set.seed(2913)

streamflow_data <- read_csv(

paste("synthetic_data",paste0("synthetic_streamflow_data.csv"), sep = .Platform$file.sep)

)Rows: 492 Columns: 8

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

dbl (7): rain, SOI, year, moy, time, log_flow, flow

date (1): month

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.head(streamflow_data)# A tibble: 6 × 8

month rain SOI year moy time log_flow flow

<date> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1983-01-01 0.0641 0.0447 1983 0.0833 0 -0.786 0.456

2 1983-02-01 0.0755 0 1983 0.167 0.00204 -0.997 0.369

3 1983-03-01 0.467 0.0877 1983 0.25 0.00407 2.36 10.6

4 1983-04-01 0.237 0.270 1983 0.333 0.00611 1.32 3.74

5 1983-05-01 0.306 0.651 1983 0.417 0.00815 2.44 11.4

6 1983-06-01 0.118 0.5 1983 0.5 0.0102 1.02 2.76 2.1 Explore the data

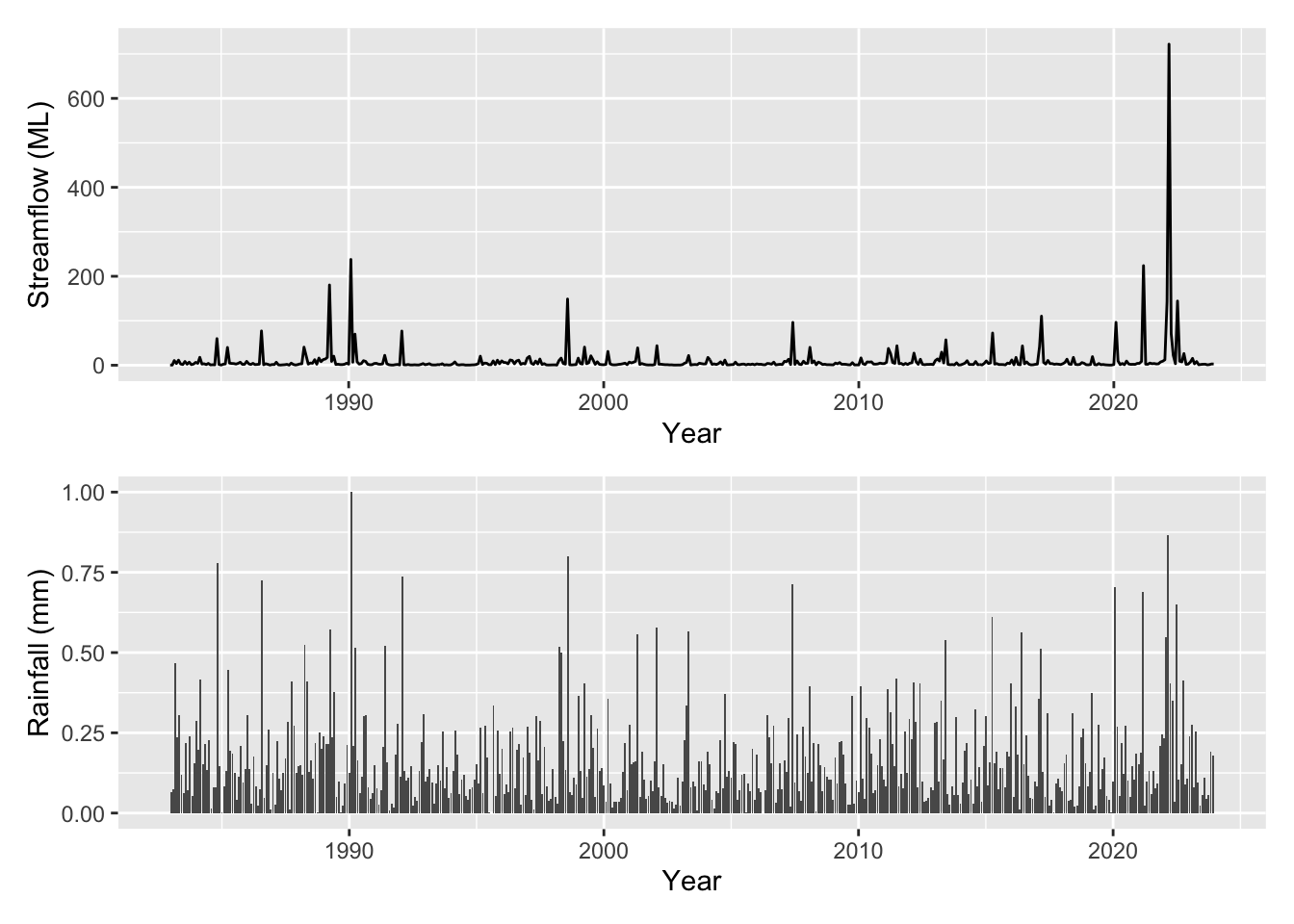

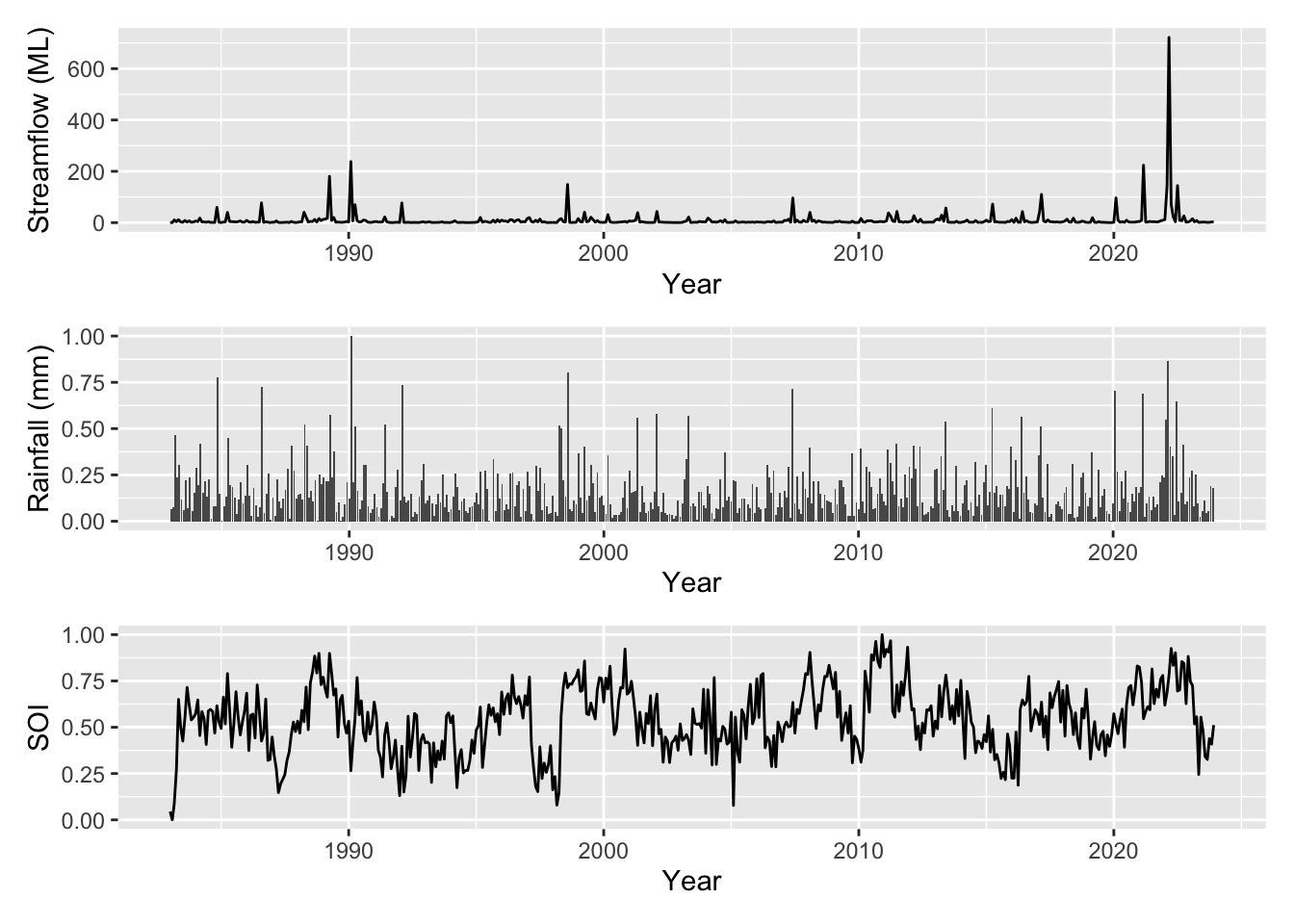

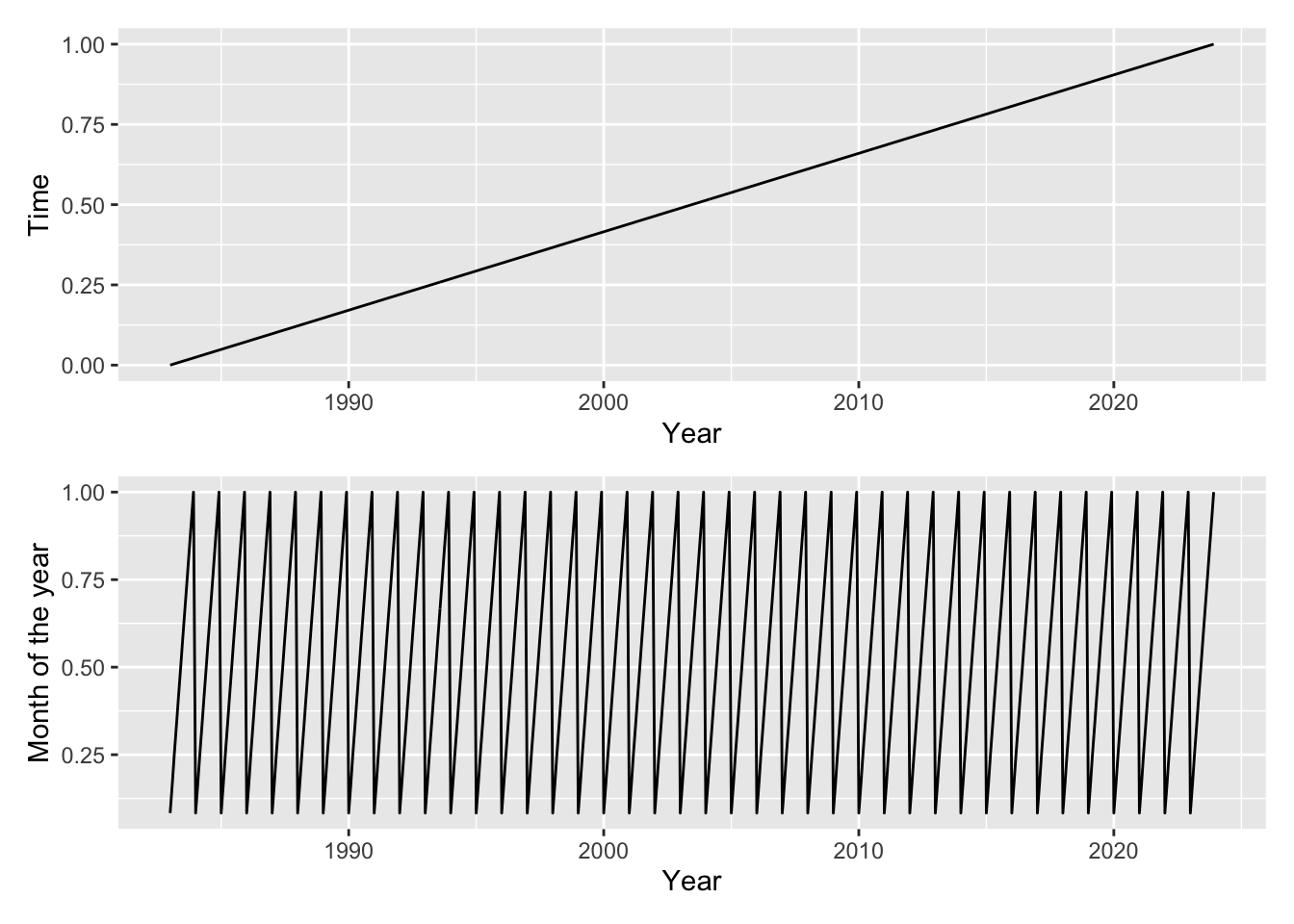

Lets plot the variables (both the target and the covariates) that we have available to us (all monthly):

rainfall: mm monthly sumSOI: Southern Oscillation Indexmoy: month of the year (1-12 before scaling)time: months from start of the seriesflow: monthly mean streamflow (target)log_flow: log of target

You can see that the target variable flow is given in ML, however the covariates have all been scaled to be between 0 and 1. Lets plot these up and see what we are working with.

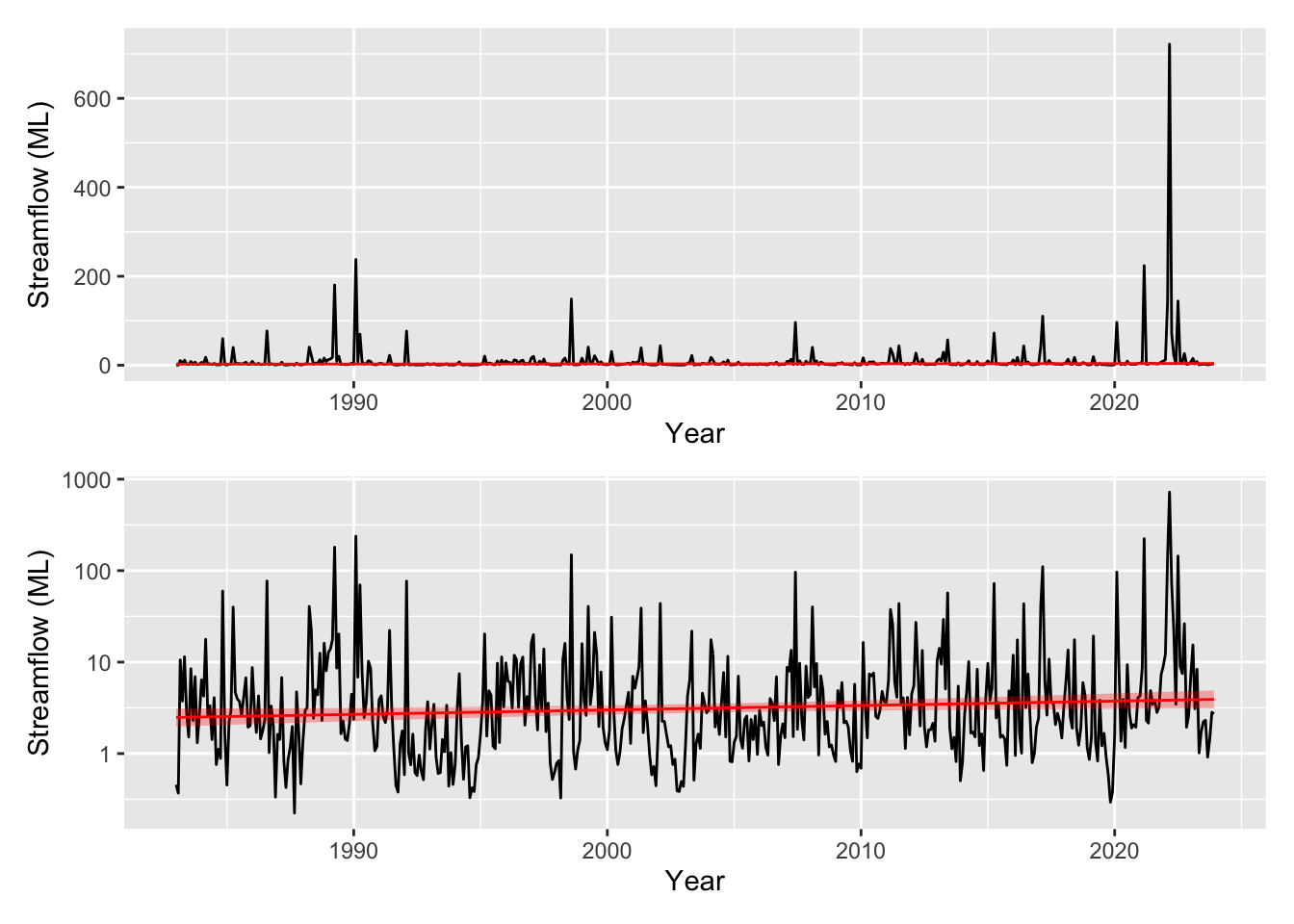

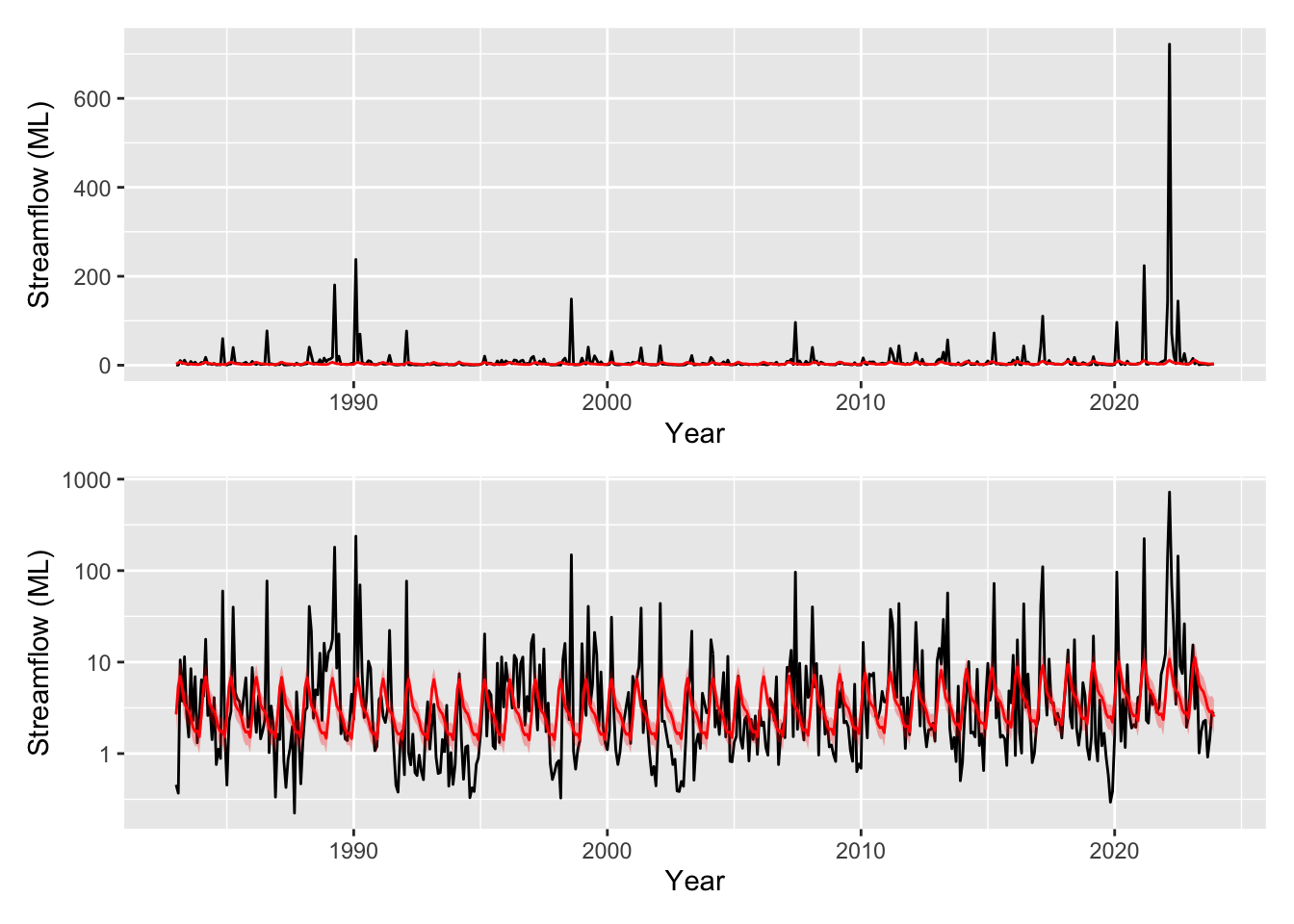

Our streamflow is clearly correlated to rainfall as one might expect however it is unclear the influence of broader climatic variability (in this case represented by SOI) or if there are any other persistent trends in the data beyond the effect of SOI.

3 A good ol’ fashioned linear trend

First lets try with a linear fit to the time variable, this will give us a simple time trend. We supply the gam with a formula (see ?formula.gam) which describes the additive relationship among the covariates and the target variable. In this case we are specifying a simple model:

\[flow = \alpha + \beta_t \cdot time + \epsilon \qquad \epsilon \sim N(0, \sigma^2)\]

# try with a linear fit first

m1 <- gam(

flow ~ time, # the formula specifying the model

data = streamflow_data, # our data.frame

family = gaussian(link="identity") # our family and link function

)

summary(m1) # text summary of the model

Family: gaussian

Link function: identity

Formula:

flow ~ time

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 4.276 3.567 1.199 0.2312

time 11.748 6.175 1.903 0.0577 .

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.00531 Deviance explained = 0.733%

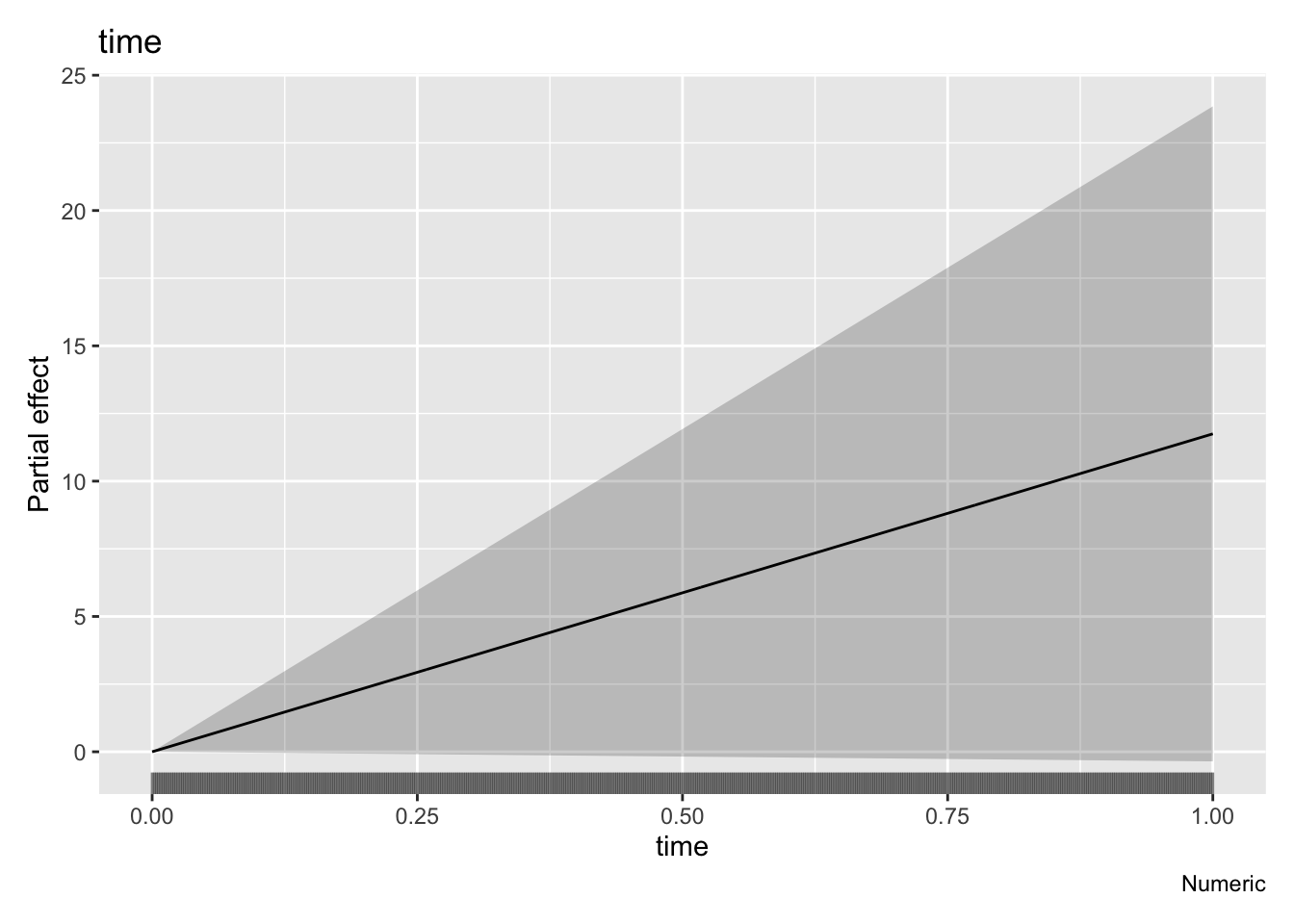

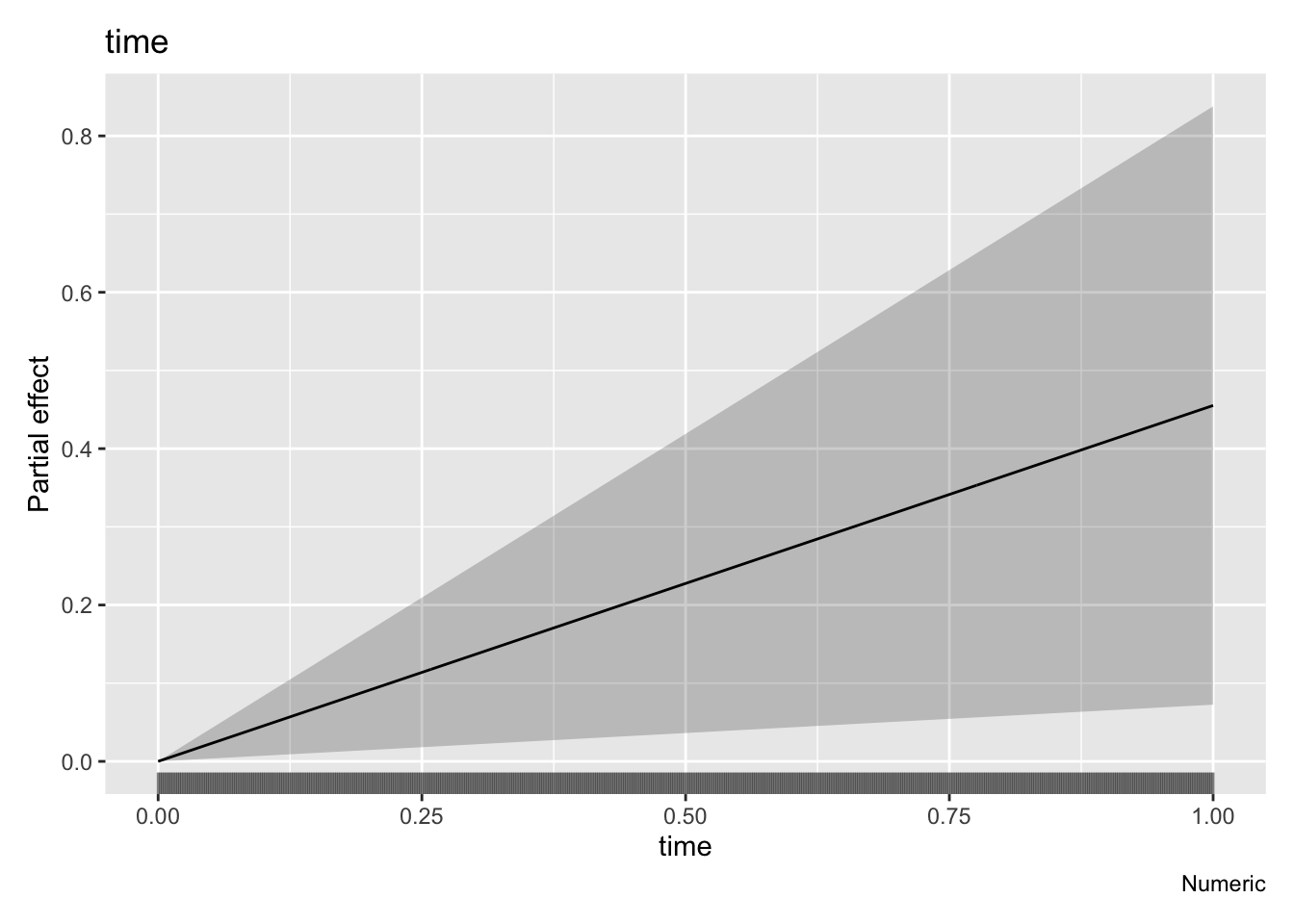

GCV = 1576.1 Scale est. = 1569.7 n = 492draw(parametric_effects(m1)) # plot our linear trend term

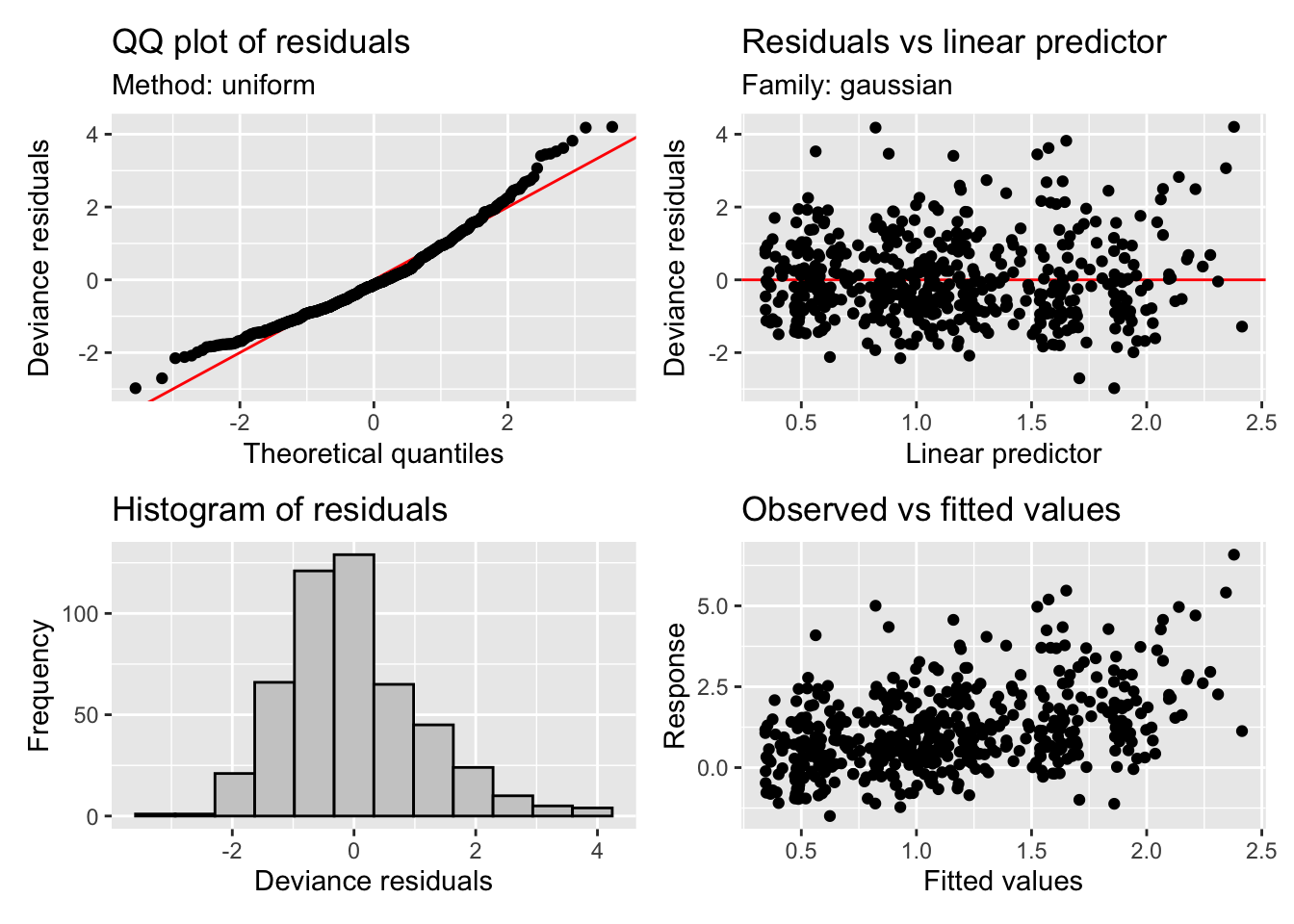

Looking at the estimated linear trend (given by draw(parametric_effects(m1))) there’s a fair bit of uncertainty, and this can also be seen examining the “Parametric coefficients” section of summary(). Lets use the gratia function appraise() to check the residuals of the model.

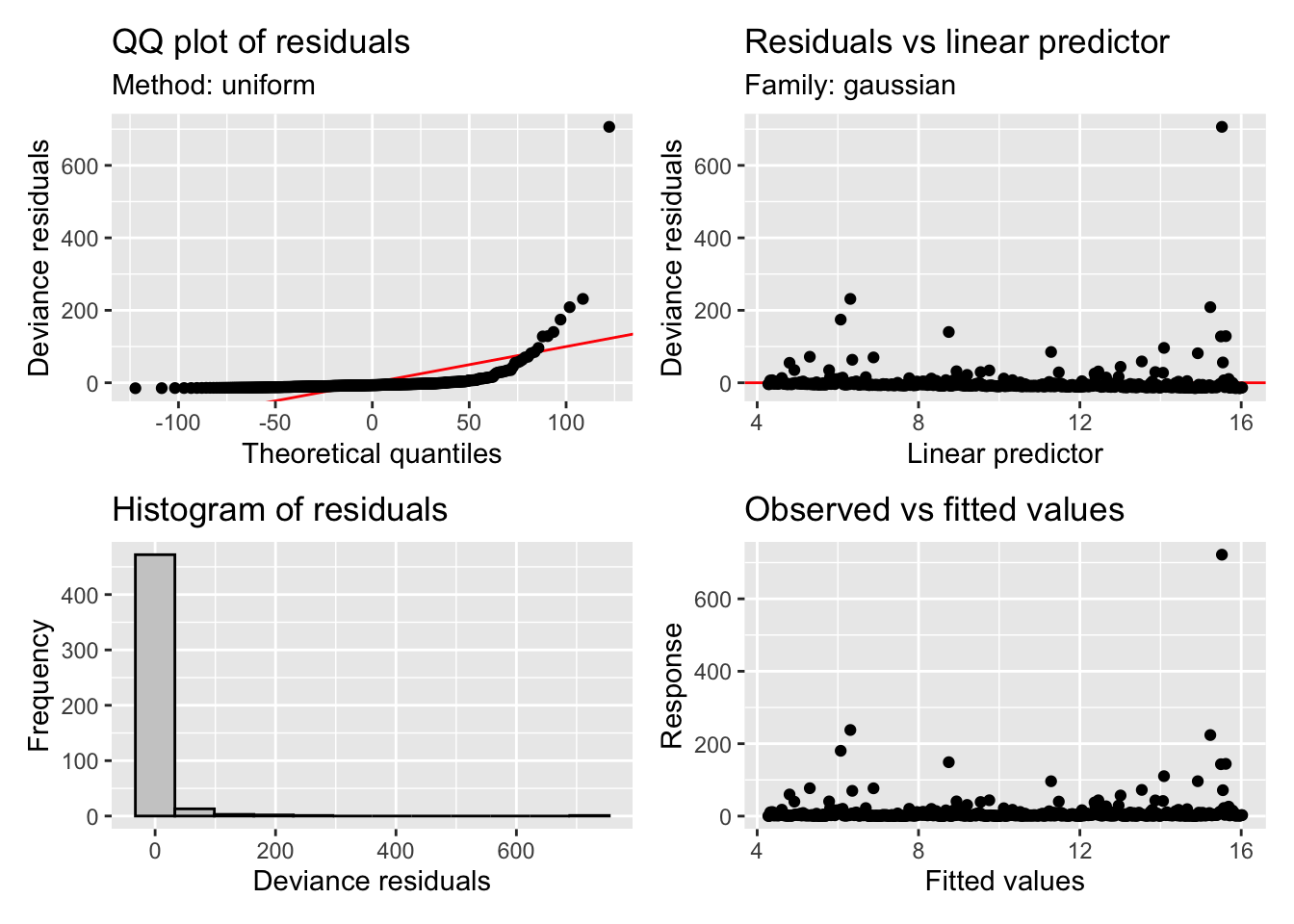

appraise(m1)

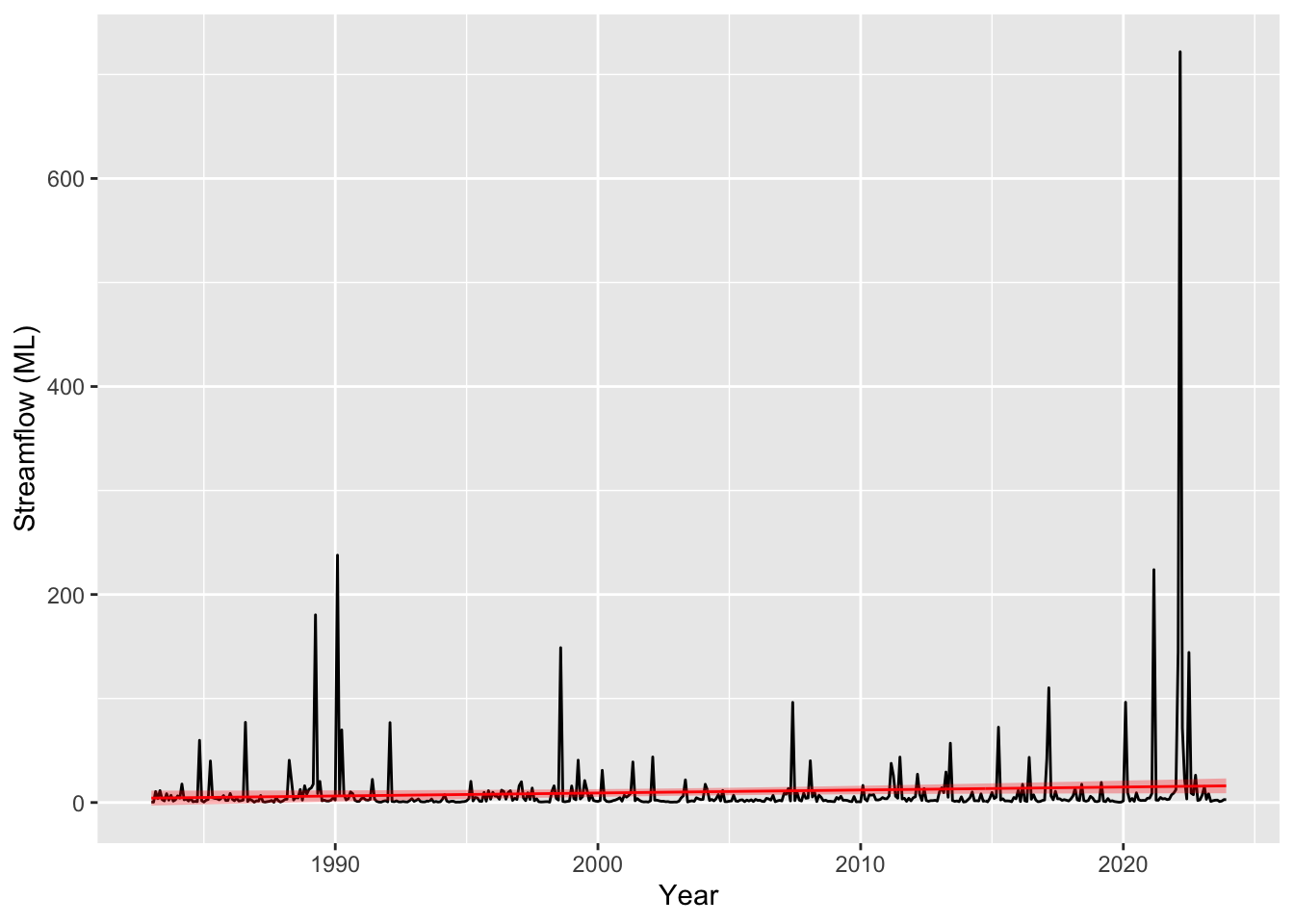

# plot the model prediction against our synthetic streamflow values using a handy function

plot_model_prediction(m1, streamflow_data)

Oh sweet Caroline, those residuals! They are awful to say the least. Our points on the QQ plot should be sitting on the 1:1 line but they are all over the place. You can look at the prediction plot and see why, we have a very large tail as a result of the huge residuals when predicting the “flood” events. Granted we haven’t used any of our covariates yet (namely rainfall which will be the main driver of streamflow), but its still not looking good. If you’re new to these diagnostics you will get more a feel for what these plots are telling you as we continue but if you would like to read more you can check out ?gam.check.

mgcv gives us the option via the family argument (see ?family.mgcv) to specify a range of distributions for the residuals. We have used guassian with an identity link. We could perhaps try a distribution that better describes our residuals, for example a Gamma distribution (Gamma(link="log")) or Tweedie (tw(link="log")) - note both use a log link to transform \(\mu\) into the response space. In GLM terms \(\mu = g^{-1}(X\beta)\) where \(g\) is the link function. This call would be something like:

m2 <- gam(flow ~ time, data = streamflow_data, family = Gamma(link="log"))

Not to over complicate things lets just aim to get our residuals, which are spread over orders of magnitude, on a reasonable scale. Power transforms are commonly used in the hydrological literature (namely box-cox transforms) to address these types of heteroscedasticity issues. So lets model instead the log of the streamflow (log_flow in our streamflow_data data frame) with the same gaussian family (and identity link).

# modelling log_flow

m2 <- gam(

log_flow ~ time,

data = streamflow_data,

family = gaussian(link="identity")

)

# as before

summary(m2)

Family: gaussian

Link function: identity

Formula:

log_flow ~ time

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.9089 0.1128 8.059 5.91e-15 ***

time 0.4552 0.1952 2.331 0.0201 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.00895 Deviance explained = 1.1%

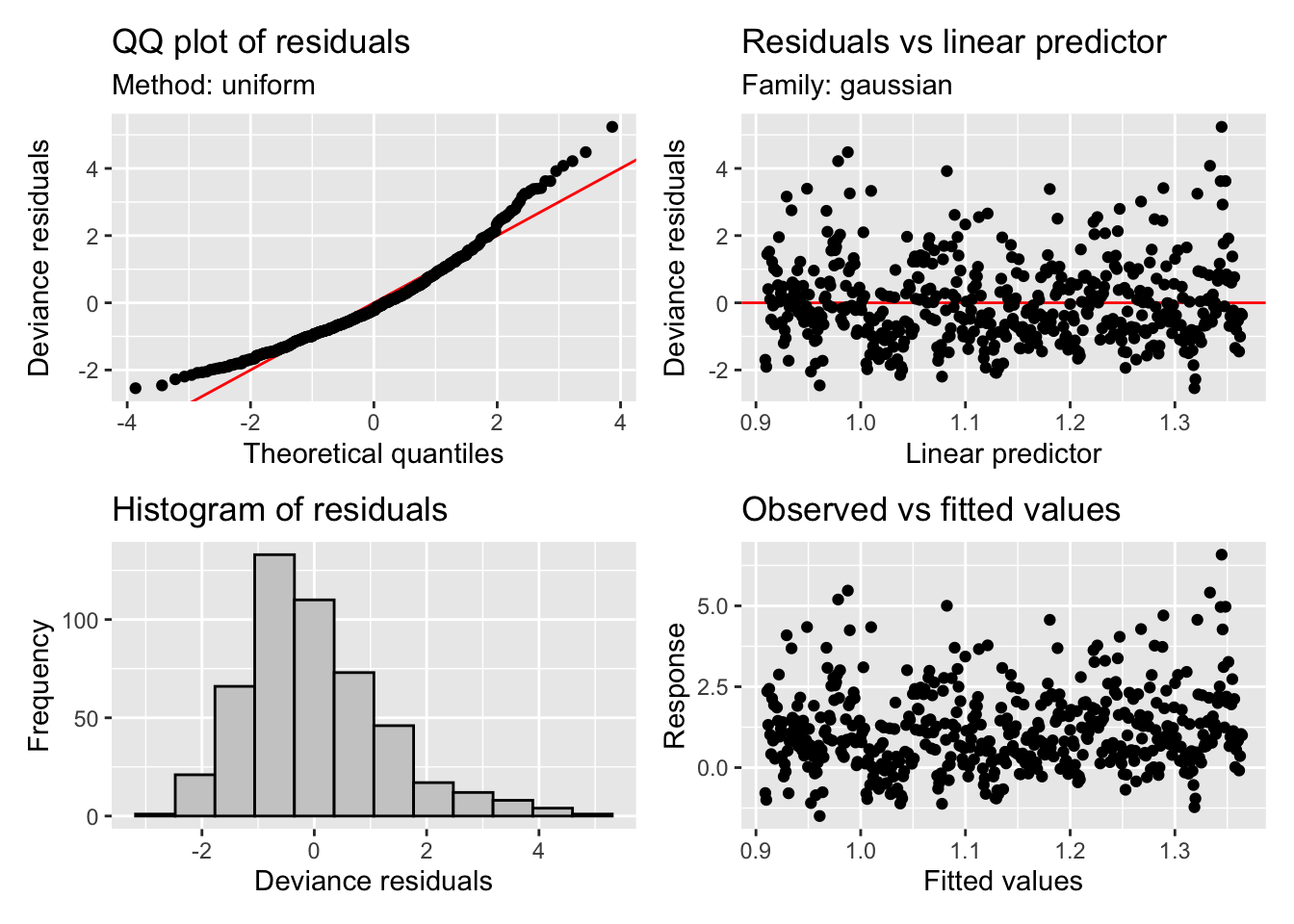

GCV = 1.5756 Scale est. = 1.5692 n = 492appraise(m2)

draw(parametric_effects(m2))

# plot on original and with log10 y scale to better show the fit

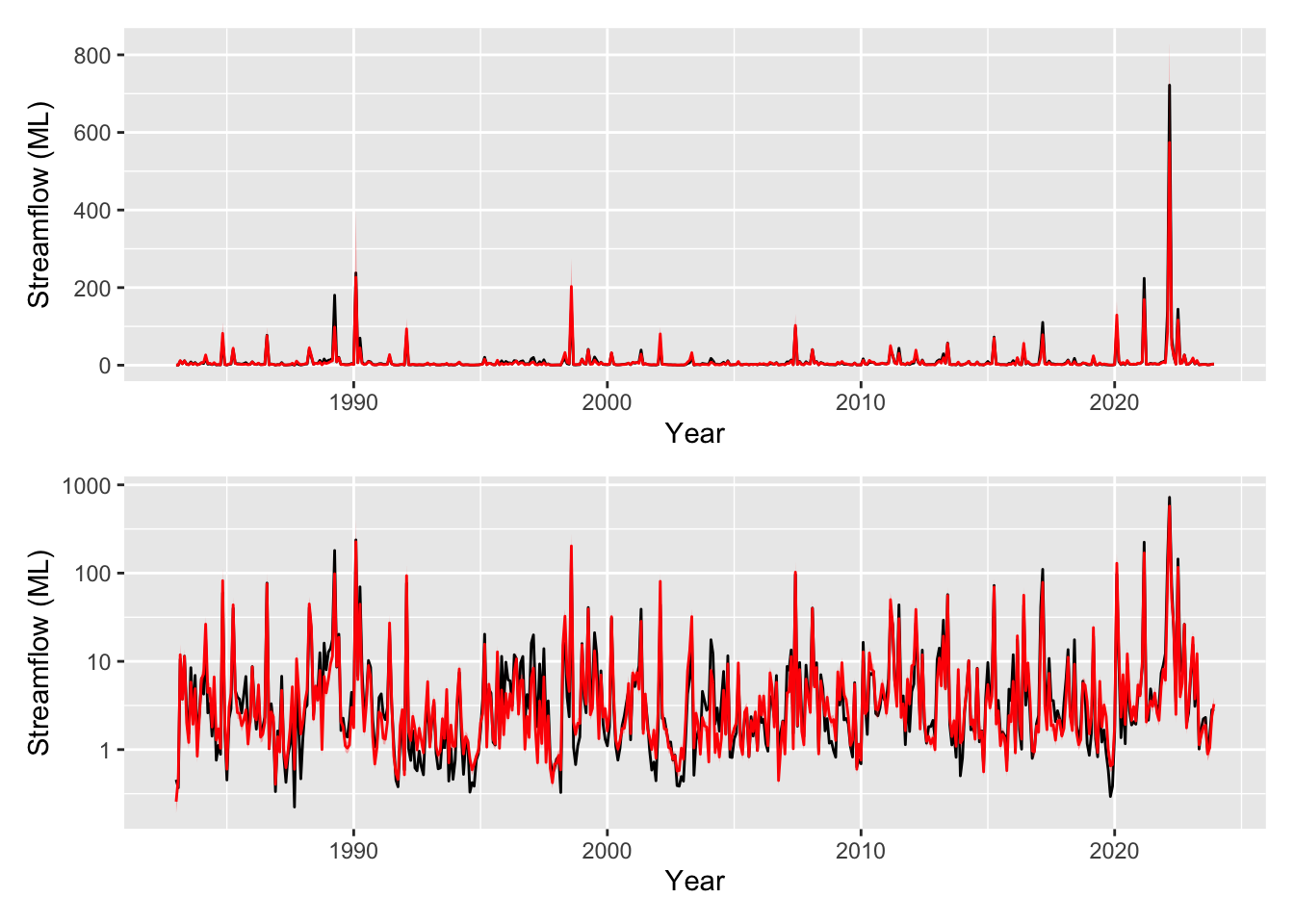

pred_plot <- plot_model_prediction(m2, streamflow_data, exp_bool = T)

wrap_plots(

pred_plot,

pred_plot + scale_y_log10(),

ncol = 1

)

The residuals are looking much much better, we seem to have solved the heteroscedasticity issues, though we still have some large deviances (unsurprising given we are only fitting a linear trend). We have a trend that increases through time now that our model is not being influenced as strongly by the heavy tailed residuals, although our uncertainty bands are again large. However, mgcv calculated significance of the smooth terms is starting to indicate that the smooth may be significant - scraping in under the mystical p<0.05 threshold with a cool 0.0201 (see the summary() output).

4 Stepping it up with a smooth on time

We are now going to get a bit more adventurous and will introduce a smooth on time (s(time)). This means diving into the complexities of a nonlinear and flexible fit to the time trend. For the sake of brevity we will start to introduce some of the other covariates we expect to be influencing the streamflow too - in this case a seasonal component which fits a spline based on the month of the year s(moy). The equation is not pretty but lets keep it as close to the mgcv call as possible:

\[log\_flow = \alpha + f\left(time\right) + f\left(moy\right) + \epsilon \qquad \epsilon \sim N(0, \sigma^2)\]

m3 <- gam(

log_flow ~ s(time) + s(moy),

data = streamflow_data,

family = gaussian(link="identity")

)

summary(m3)

Family: gaussian

Link function: identity

Formula:

log_flow ~ s(time) + s(moy)

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.13651 0.05199 21.86 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Approximate significance of smooth terms:

edf Ref.df F p-value

s(time) 2.075 2.590 4.568 0.00635 **

s(moy) 6.147 7.309 11.657 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.16 Deviance explained = 17.4%

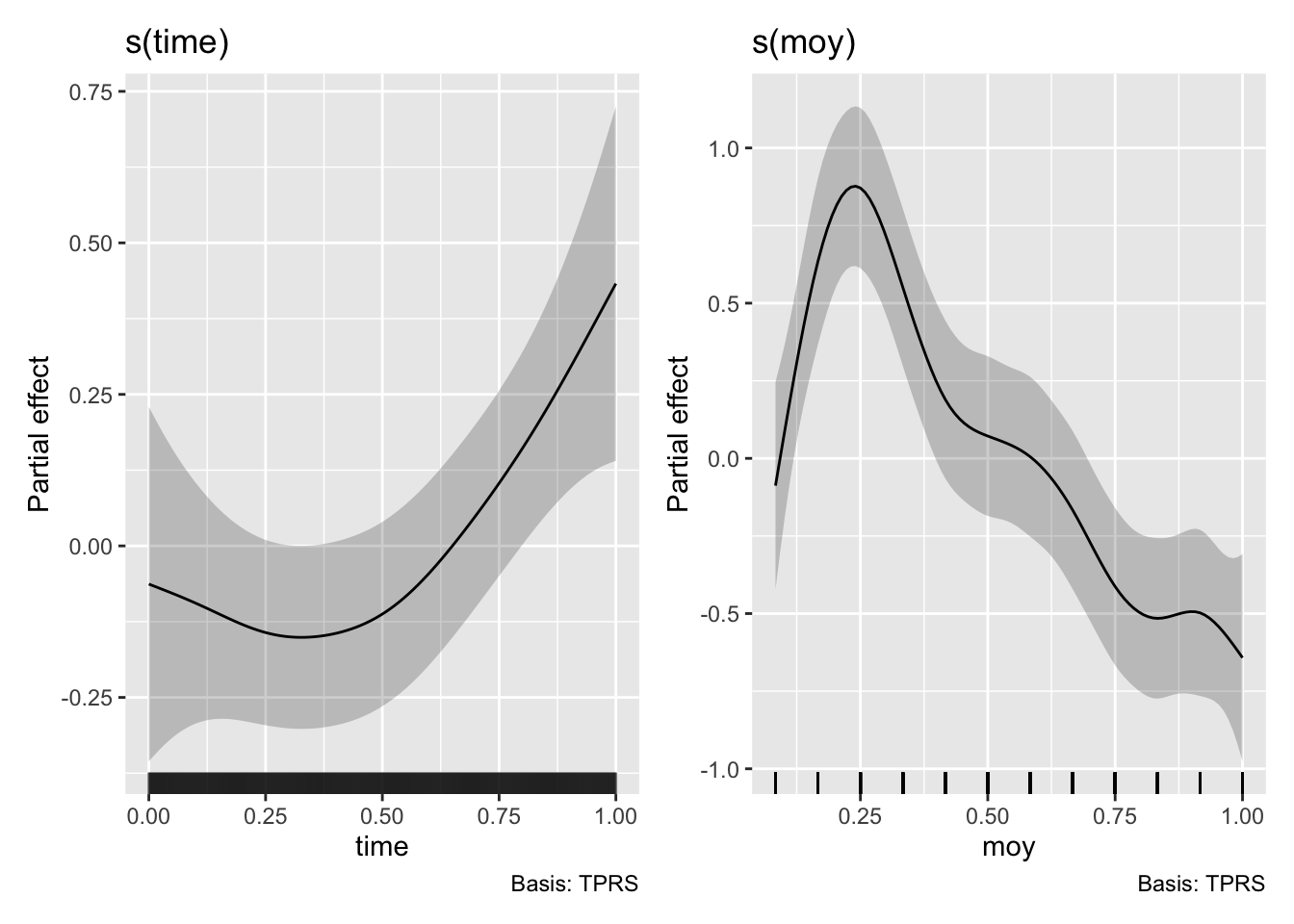

GCV = 1.3553 Scale est. = 1.3299 n = 492appraise(m3)

# now that we are drawing smooths we can use the `draw` function from `gratia`

draw(m3)

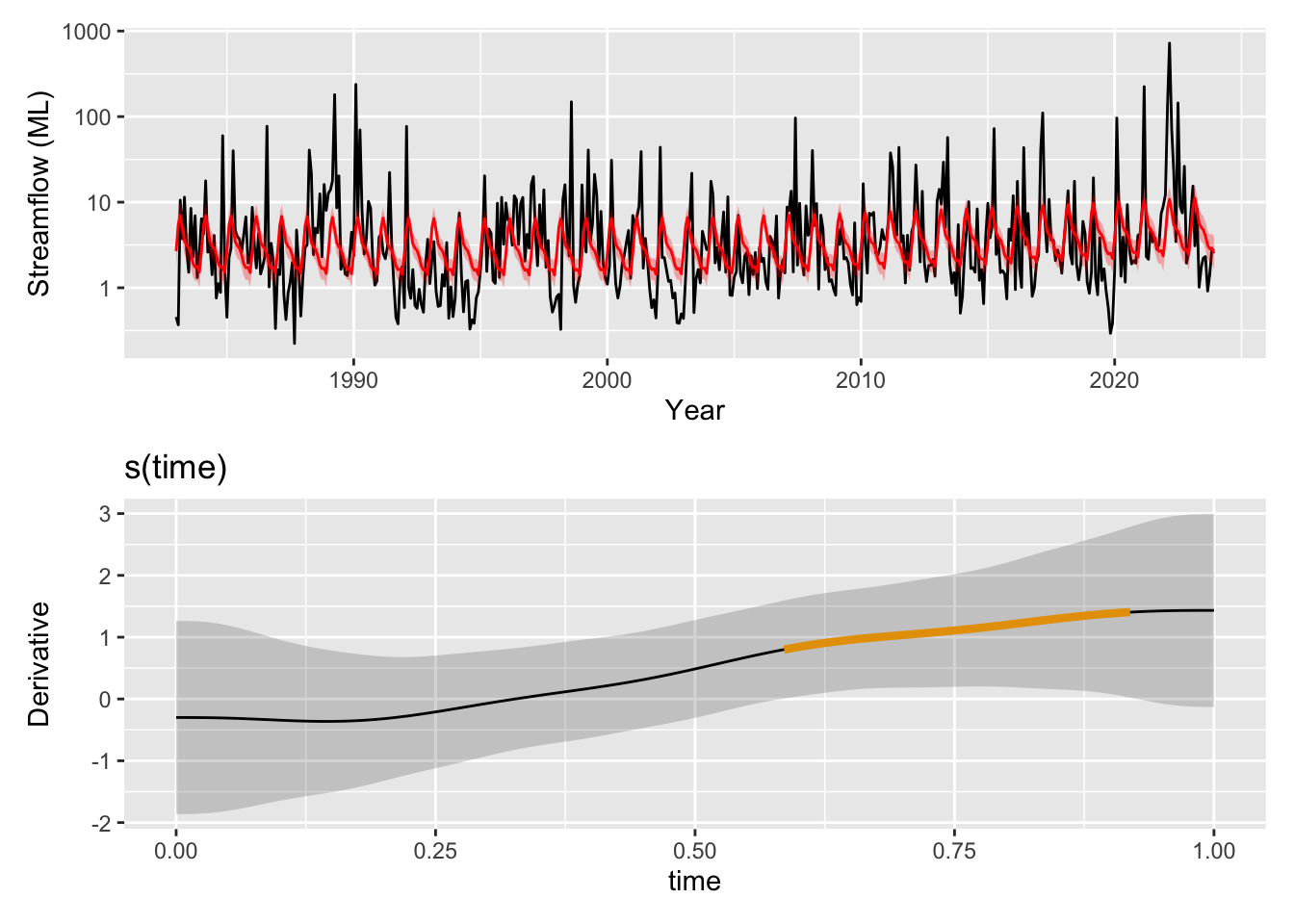

pred_plot <- plot_model_prediction(m3, streamflow_data, exp_bool = T)

wrap_plots(

pred_plot,

pred_plot + scale_y_log10(),

ncol = 1

)

You can see we have now have a model with much improved residuals given we are now starting to explain some of the variability in the data (R-sq.(adj) = 0.16). The seasonal component shows variability in the flow that peaks around March and troughs in the late part of the year. This can be seen in the prediction plot too where we have some varibility on top of the trend. However we also see something strange, the effect of moy is noticeably different from the end of December to the beginning of January. We can’t blame the model, its just cranking the handle and doing its very best. But we can use some prior knowledge to ensure the model is coherent and thus be more confident in our results. There are many types of splines we can use in mgcv (see ?smooth.terms). We wont go into them all here, but we will make use of a cyclic spline (bs="cc") which is specifically designed to ensure the ends are penalised to match, i.e., there should be no large discontinuity in the seasonal component. We will adopt this fix in the models below and you can check out the fixed smooth below.

# gratia has a function derivatives() so that we can see how the smooth is changing and how certain we are that it is increasing or decreasing. You can use select="s(time)" in both this and the draw(m3) function above to plot a single smooth.

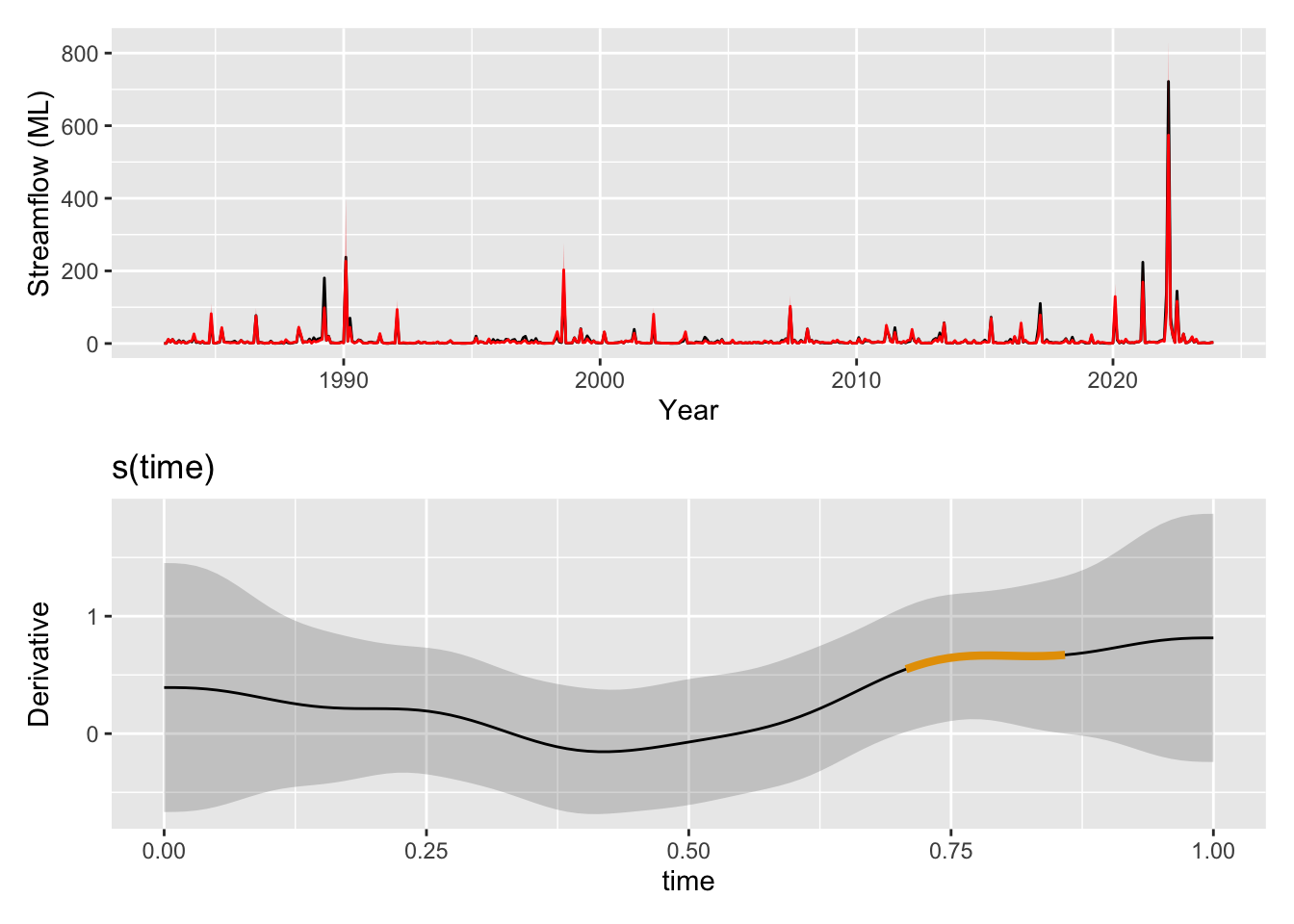

wrap_plots(

pred_plot + scale_y_log10(),

draw(derivatives(m3, select="s(time)"), add_change = TRUE, change_type = "sizer"),

ncol = 1

)

The time trend itself is picking up now some of the broader patterns with periods of higher streamflow around 1990 and in the 2020s. Time is once again identified as significant by mgcv (p = 0.00635). One question we could ask is if the trend is increasing or decreasing at any point in time. We can look at the derivatives at each point along the time smooth and determine signficant periods as those where the uncertainty bands are either both above or both below zero indicating the model is confident in s(time) increasing or decreasing, respectively. When we use gratia::derivatives() to look for significant periods of change in this trend we see that the model is showing that the magnitude of this trend was very likely increasing through the 2010s.

4.1 Here comes the rain

We still haven’t explored what we could strongly suspect to be the main driver of streamflow variability: rainfall-runoff processes. We expect this to have some nonlinearity, clearly we are grossly simplifying the processes in the catchment, in particular with respect to groundwater interactions. Of course to let you in on a secret, in this example we have employed nonlinearity for this term when creating our synthetic data. So we add a smooth on monthly rainfall (s(rain)).

In addition we add in the impact of longer term climatic variability on the streamflow. In this dataset we capture this through the Southern Oscillation Index (SOI) which we interpret as the effect of longer periods of wet and dry on the catchment which contribute to the overall streamflow signal. For example, we might expect say soil moisture to be lower after extended dry periods leading to less streamflow for a given amount of rainfall compared to times with waterlogged soil. Here we introduce another smooth on this term.

m4 <- gam(

log_flow ~ s(time) + s(moy, bs="cc") + s(rain) + s(SOI),

data = streamflow_data,

family = gaussian(link="identity")

)

summary(m4)

Family: gaussian

Link function: identity

Formula:

log_flow ~ s(time) + s(moy, bs = "cc") + s(rain) + s(SOI)

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.13651 0.01954 58.15 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Approximate significance of smooth terms:

edf Ref.df F p-value

s(time) 3.151 3.905 3.56 0.00723 **

s(moy) 6.570 8.000 30.33 < 2e-16 ***

s(rain) 3.160 3.973 592.26 < 2e-16 ***

s(SOI) 3.034 3.832 97.17 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.881 Deviance explained = 88.5%

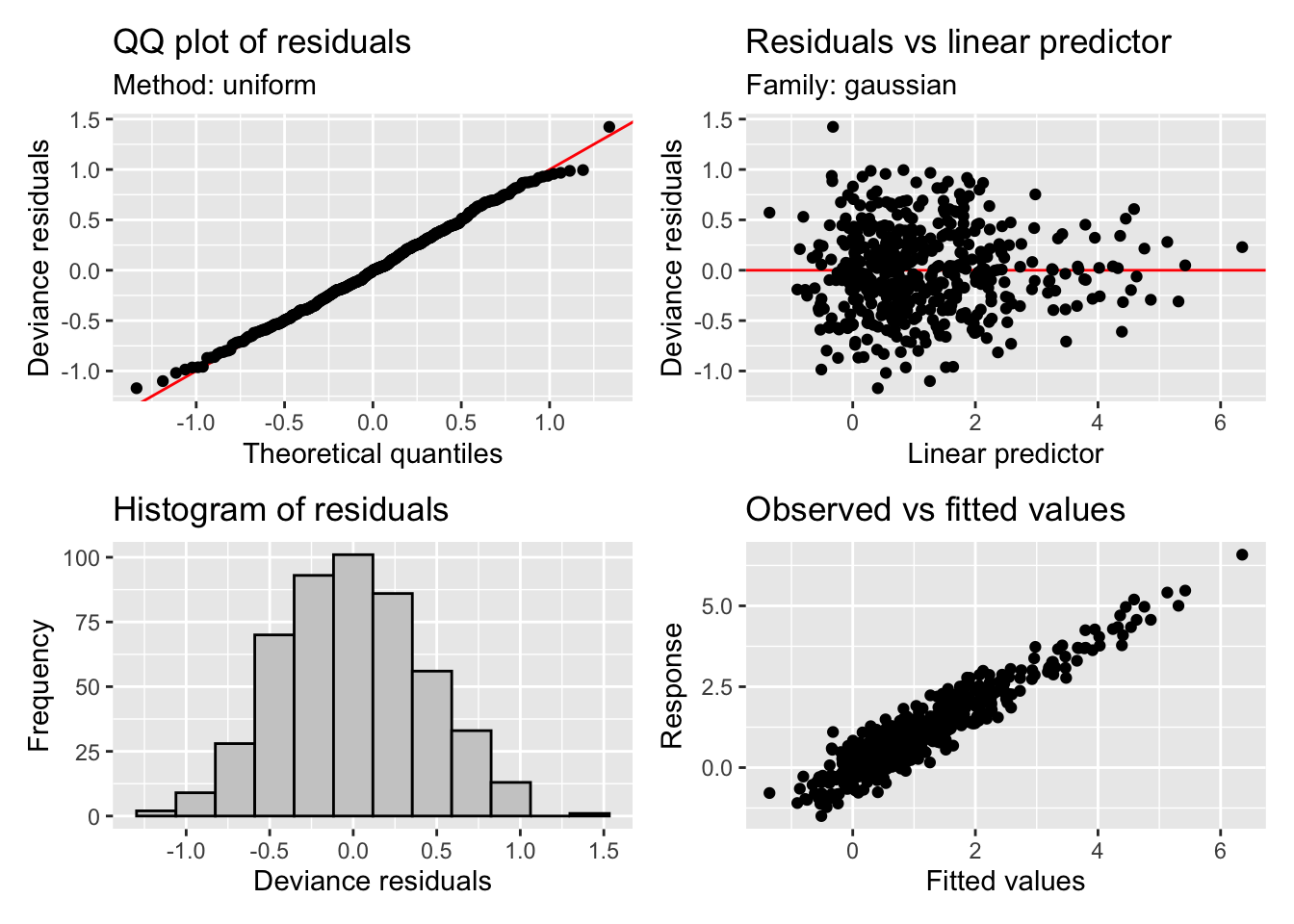

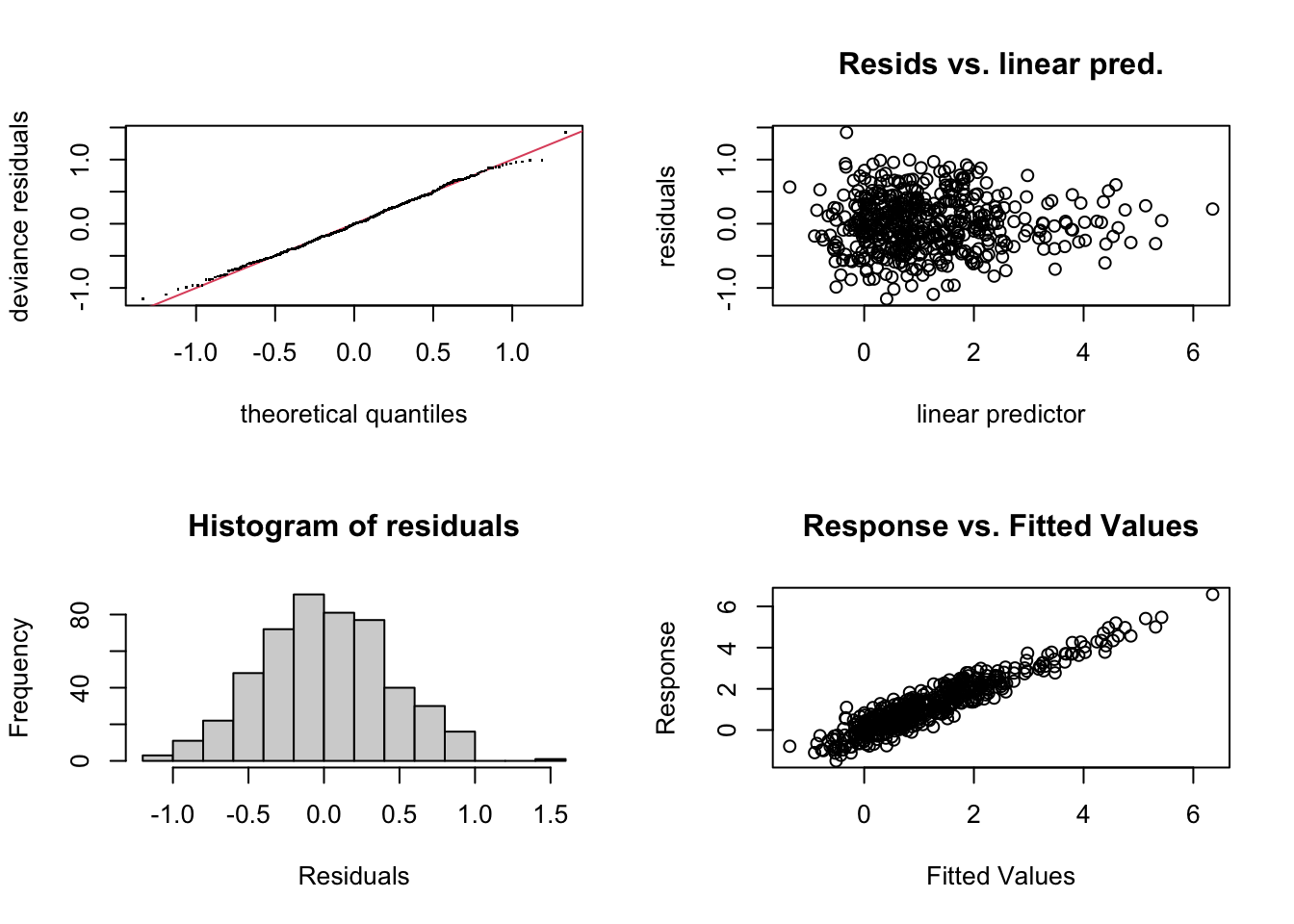

GCV = 0.19462 Scale est. = 0.18793 n = 492appraise(m4)

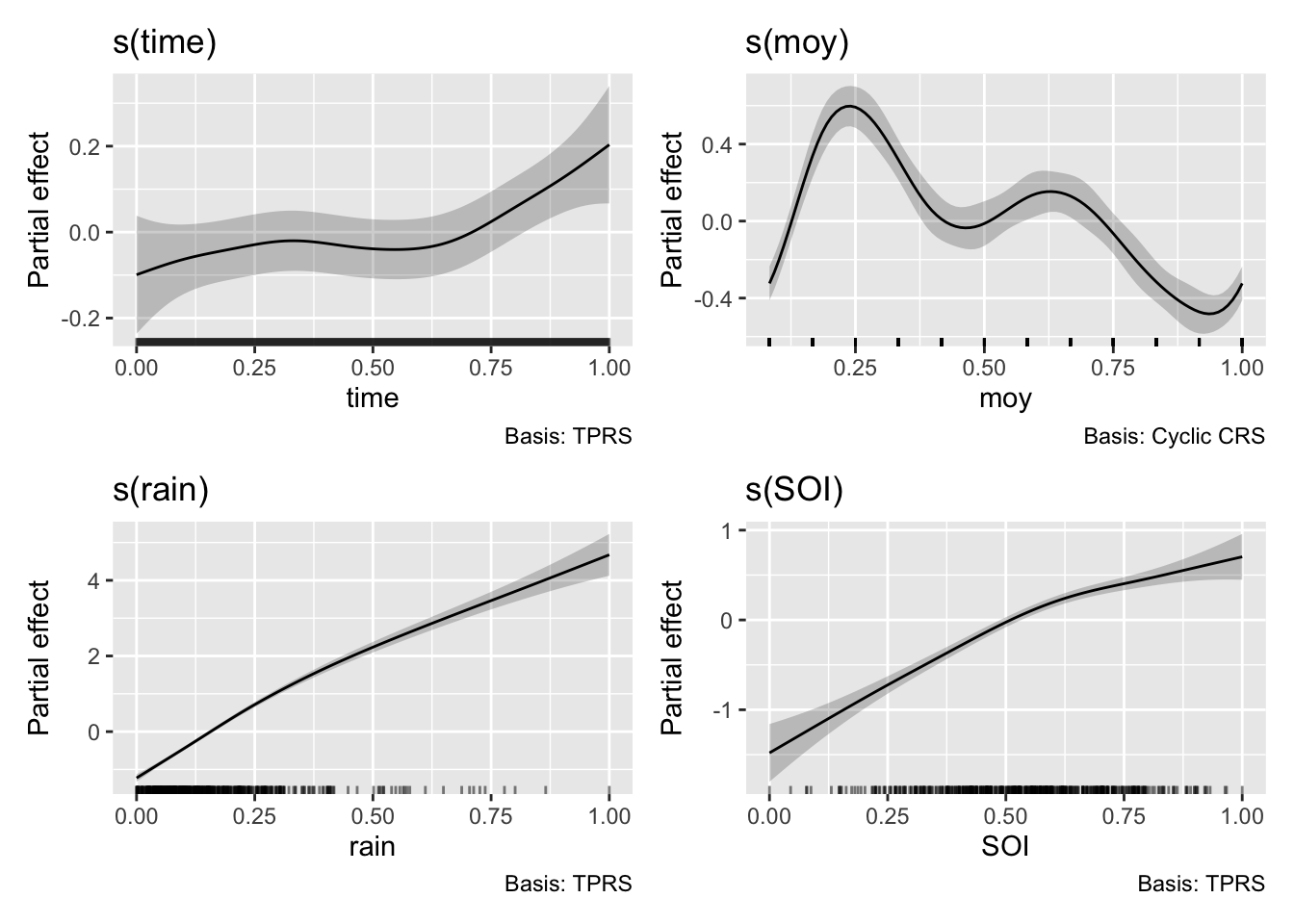

draw(m4)

pred_plot <- plot_model_prediction(m4, streamflow_data, exp_bool = T)

wrap_plots(

pred_plot,

pred_plot + scale_y_log10(),

ncol = 1

)

wrap_plots(

pred_plot,

draw(derivatives(m4, select="s(time)"), add_change = TRUE, change_type = "sizer"),

ncol = 1

)

We have a really good fit now, we are explaining a lot of the variance (R-sq.(adj) = 0.881). We have some interesting and realistic looking smooths on the new variables of rainfall and SOI with low uncertainty. The SOI smooth looks reasonable, during periods of high SOI (La Niña) we might expect wetter conditions compared to periods of low SOI (El Niño). The smooths are all determined to be significant by mgcv but note our time smooth has changed from m3, though again with p=0.00723.

But all is not well with our time smooth. We use the gam.check() diagnostic to check our model. Most of the plots will be familiar from our previous dalliance with the appriase() function. However, we also have additional information on the complexity of the smooths. Briefly, by increasing the number of knots available for a spline to fit data, we can increase the ability of the function to take on very complex shapes. The basis dimension checking (see the returned text) is returning that something is off about the s(time) smooth. It’s indicating that we may need to increase the number of maximum knots in the smooth above the default k=10 to fully explore the space of possible function fits. You can see ?choose.k for more information on this diagnostic and we will explore the implications of this below.

gam.check(m4)

Method: GCV Optimizer: magic

Smoothing parameter selection converged after 6 iterations.

The RMS GCV score gradient at convergence was 8.345812e-07 .

The Hessian was positive definite.

Model rank = 36 / 36

Basis dimension (k) checking results. Low p-value (k-index<1) may

indicate that k is too low, especially if edf is close to k'.

k' edf k-index p-value

s(time) 9.00 3.15 0.23 <2e-16 ***

s(moy) 8.00 6.57 1.04 0.80

s(rain) 9.00 3.16 0.95 0.14

s(SOI) 9.00 3.03 0.97 0.21

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 15 Wiggliness (the technical term)

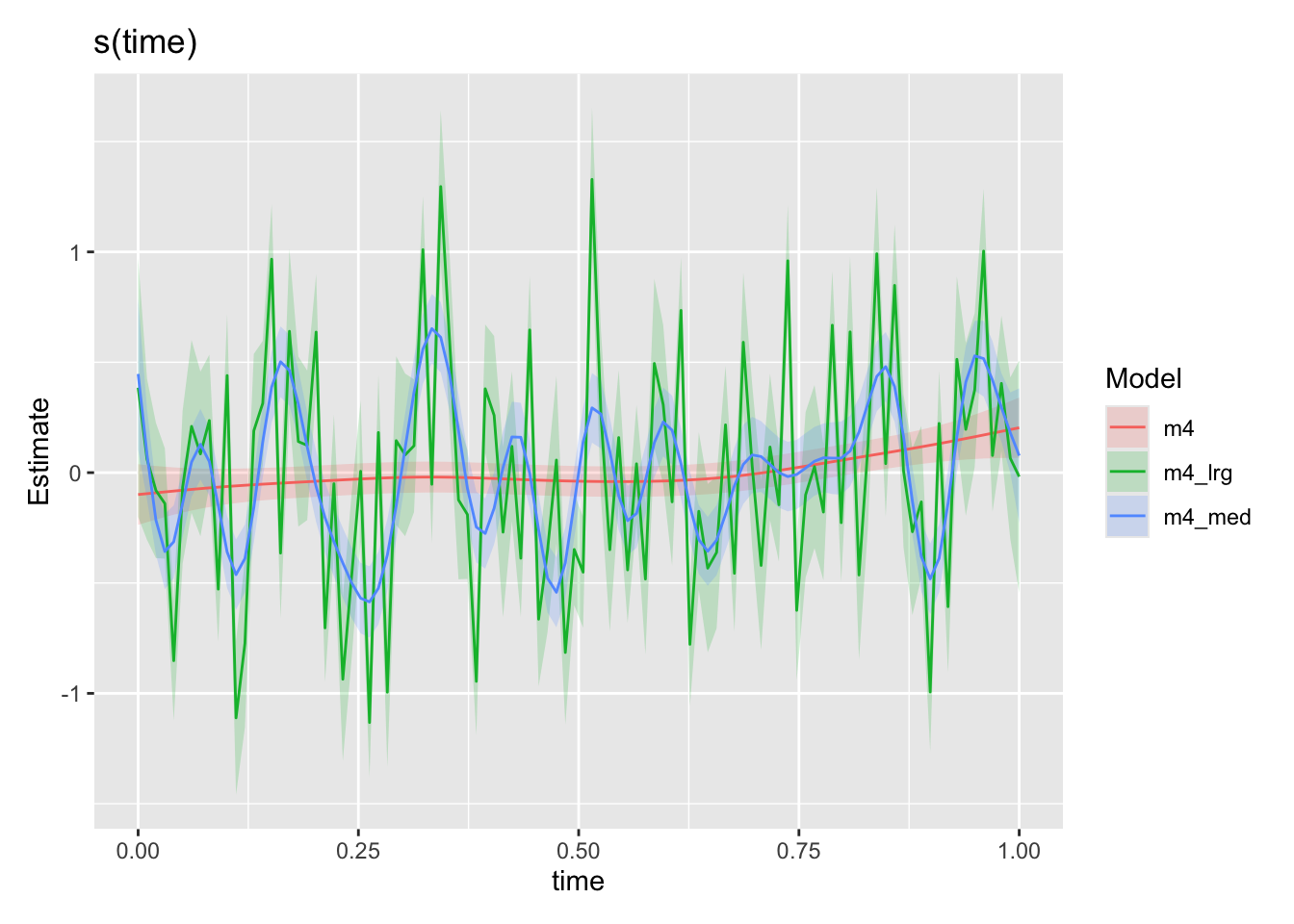

Below we look at the effects of increasing the maximum number of knots in the time smooth. Smooths have of course a penalty term which controls the “wiggliness” of the smooth as it attempts to fit the data. However, there is still some trade-off allowed between the complexity of the smooth and goodness of fit. By increasing k, we allow the model to explore a broader range of possible fits to the data and find that there are some fits to the data that better navigate that trade-off. Thus the smooth changes as we increase k.

m4_med <- gam(

log_flow ~ s(time, k=30) + s(moy, bs="cc") + s(rain) + s(SOI),

data = streamflow_data,

family = gaussian(link="identity")

)

m4_lrg <- gam(

log_flow ~ s(time, k=100) + s(moy, bs="cc") + s(rain) + s(SOI),

data = streamflow_data,

family = gaussian(link="identity")

)

draw(compare_smooths(

m4, m4_med, m4_lrg, select = "s(time)"

))

But are these trends really realistic? What are the other possible explanations for this highly variable time trend? One of the common afflictions of timeseries data is autocorrelation and particularly for us problems can arise when the residuals are not independent (i.i.d.). We could for instance imagine a flood event with a duration that spans multiple data points leading to correlated residuals in time if our model consistently under predicts an event perhaps. Or perhaps there are storages in the system which could result in extented periods of increased baseflow after an event occurs. Here we model on the monthly scale so perhaps in non-synthetic data it would be less of a problem, but we could still imagine processes on medium timescales leading to autocorrelation in the residuals of our imperfect model.

What makes it particularly bad in this situation, however, is that we have a very flexible term (s(time)) which is able to absorb some of this autocorrelation. If residuals were purely independent it wouldn’t be a problem as only having a number of knots close to/equal to the number of observations would really drive these residuals into our time smooth. With autocorrelated residuals though, the model picks up these patterns and does what it does best: fits them using any and every means available to it. Lets not blame the model, its really trying its very best. But this process means that our flexible smooth on time can absorb/fit these patterns if we give it sufficient flexibility (as in the k=30, 100 cases). Lets do a check for autocorrelation in the residuals.

# acf(residuals(m4, type="response"))

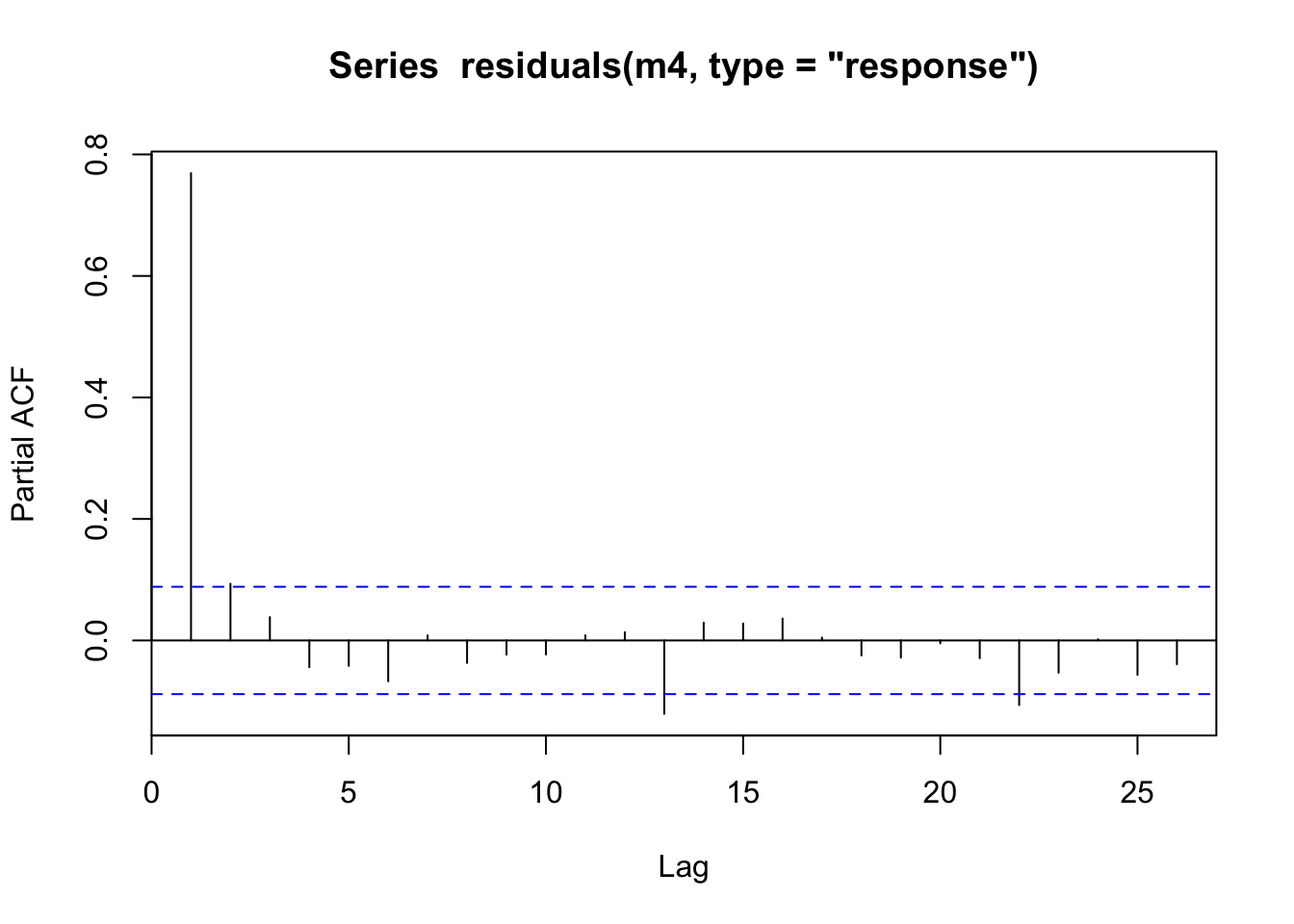

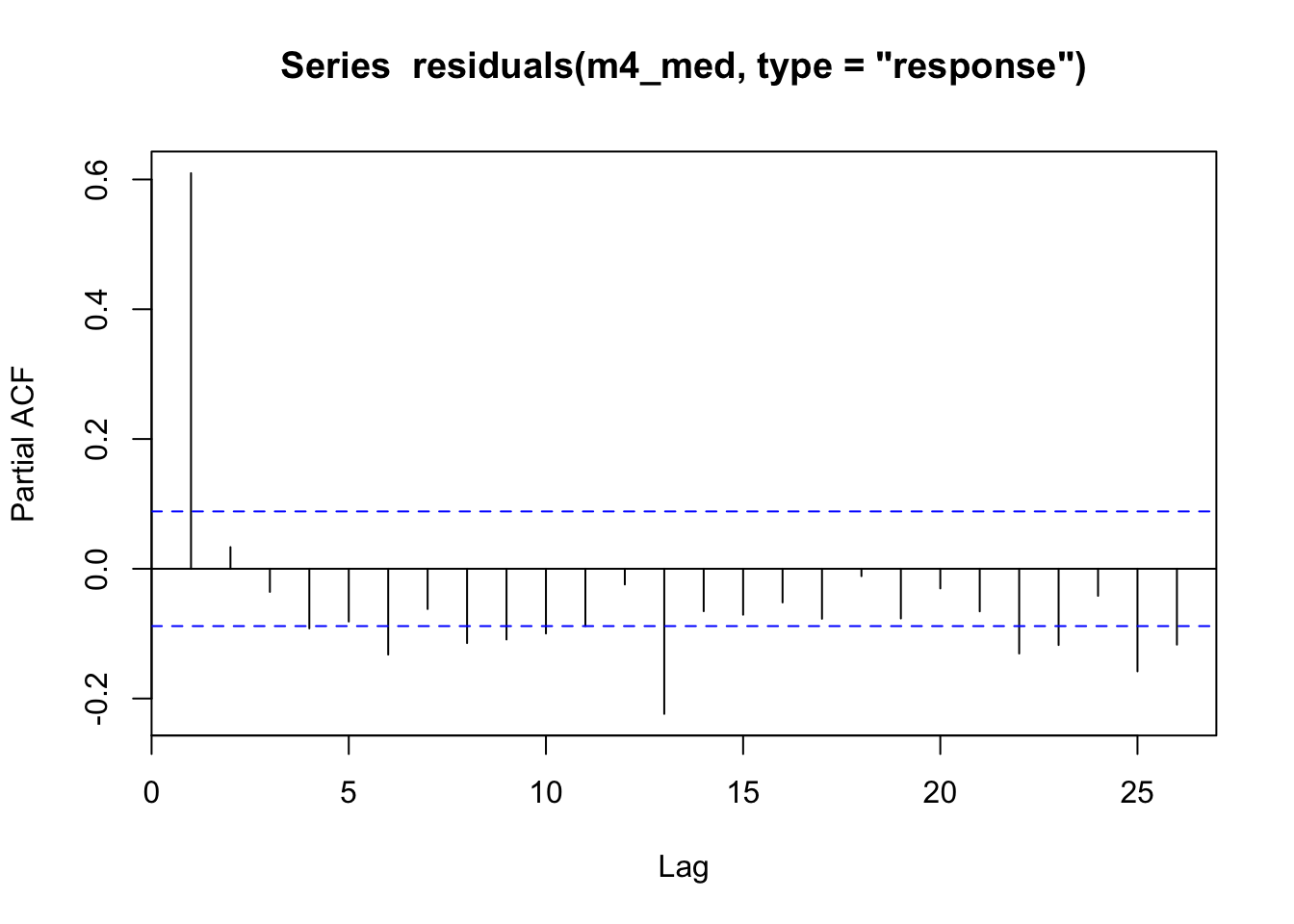

pacf(residuals(m4, type="response"))

pacf(residuals(m4_med, type="response"))

Looking at the residuals for model m4 we can see there is large autocorrelation in the residuals and in model m4_med we can see this still present but partially absorbed by our much higher complexity s(time). The partial autocorrelation function (PACF) tends to indicate the highest effect is via an AR1 process (high correlation at lag = 1). See ?acf for more details on pacf vs acf.

6 Facing down autocorrelation

So how can we deal with this? Well up until this point we have been using the gam function in mgcv, and we will find no help there. However, we can look to the gamm function which fits a Generalized Additive Mixed Model (GAMM). Again this post isn’t designed to be a textbook on GAM(M)s, so practically for us it means that we can use lme correlation structures to model the residuals. This means we can tell the model to expect AR1 correlation residuals and account for these when fitting (instead of heaping these patterns into our precious time smooth). Specifically we will use the correlation argument and specify corAR1() (see ?corClasses). Lets take a look shall we.

# fit again a model with a large number of knots for time, but now with AR1 residuals

m5 <- gamm(

log_flow ~ s(time, k=100) + s(rain) + s(moy, bs="cc") + s(SOI),

data = streamflow_data,

method = "REML",

correlation = corAR1(), # our correlation structure

family = gaussian(link="identity")

)

summary(m5$gam)

Family: gaussian

Link function: identity

Formula:

log_flow ~ s(time, k = 100) + s(rain) + s(moy, bs = "cc") + s(SOI)

Parametric coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.14010 0.06239 18.27 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Approximate significance of smooth terms:

edf Ref.df F p-value

s(time) 1.000 1.000 0.957 0.328

s(rain) 4.520 4.520 2306.608 <2e-16 ***

s(moy) 7.678 8.000 43.575 <2e-16 ***

s(SOI) 4.835 4.835 102.059 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

R-sq.(adj) = 0.873

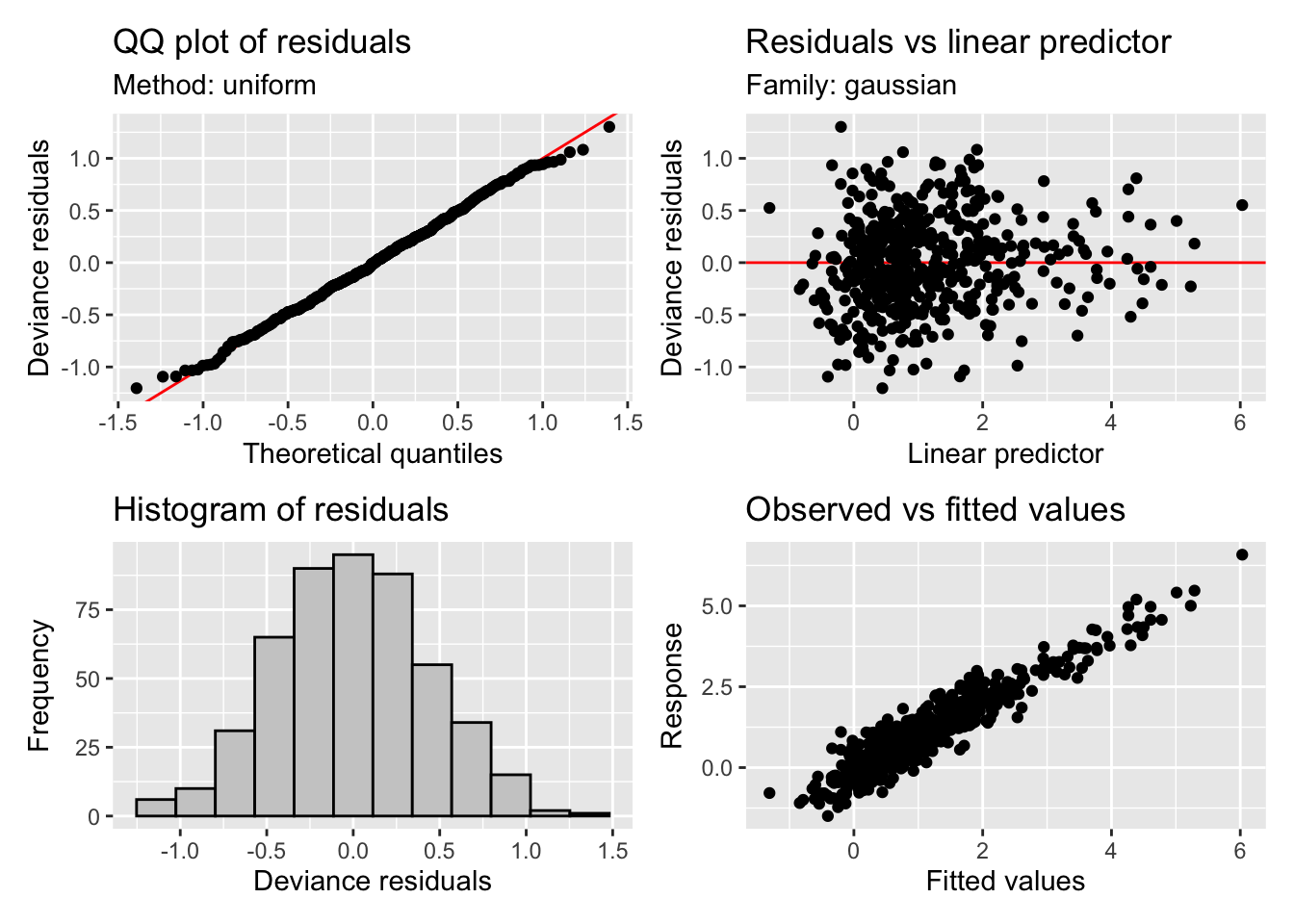

Scale est. = 0.20355 n = 492appraise(m5$gam)

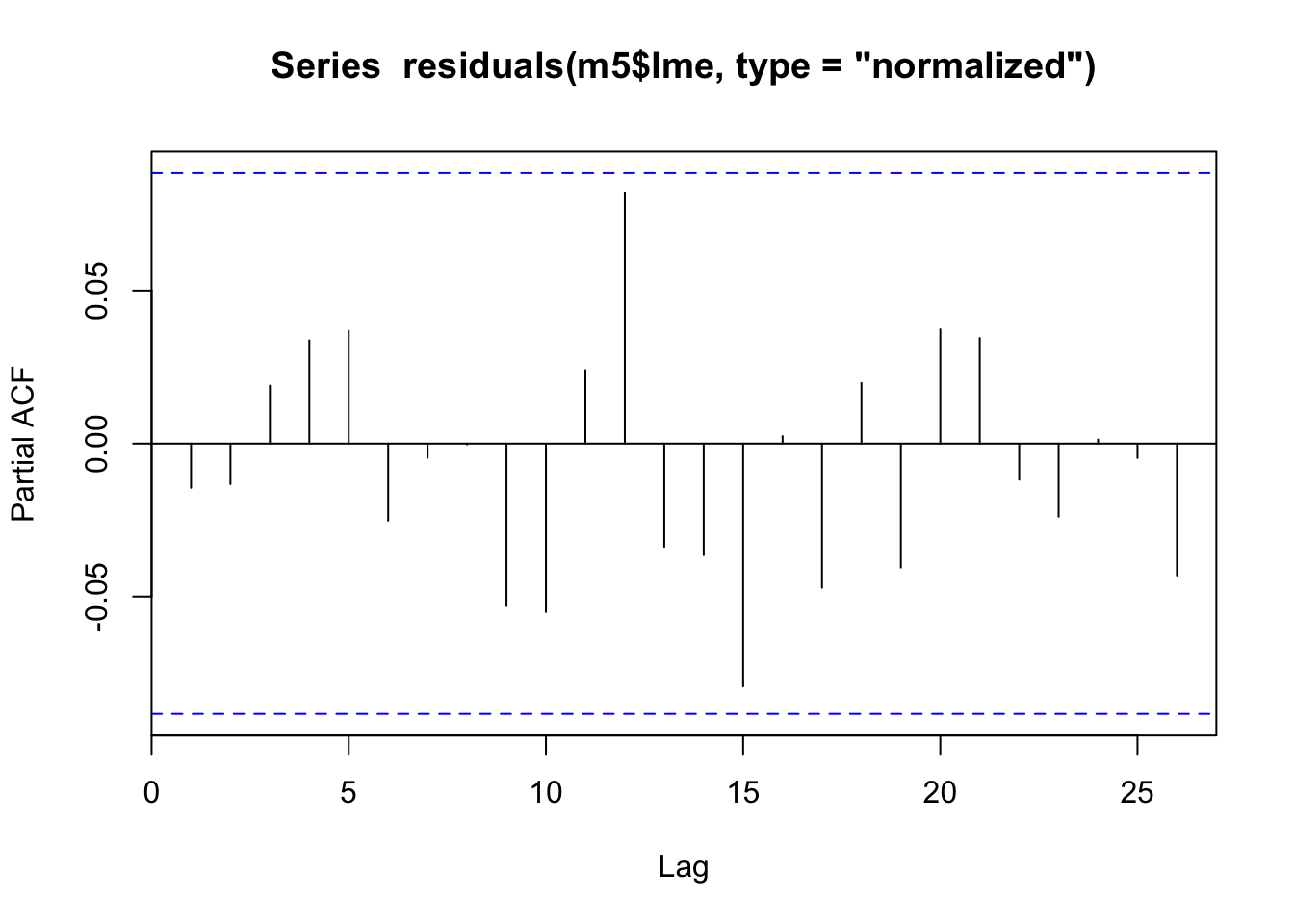

pacf(residuals(m5$lme, type="normalized"))

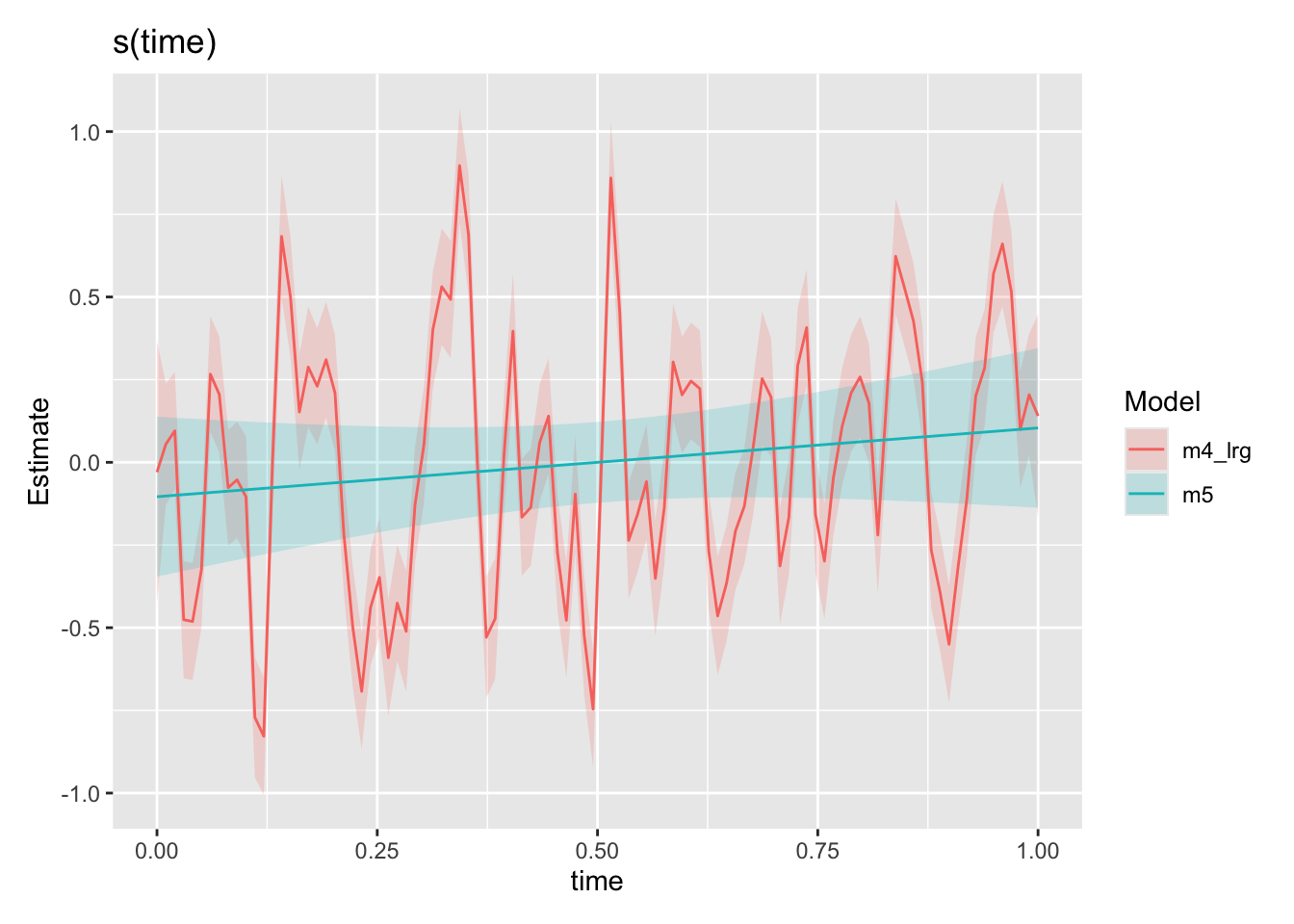

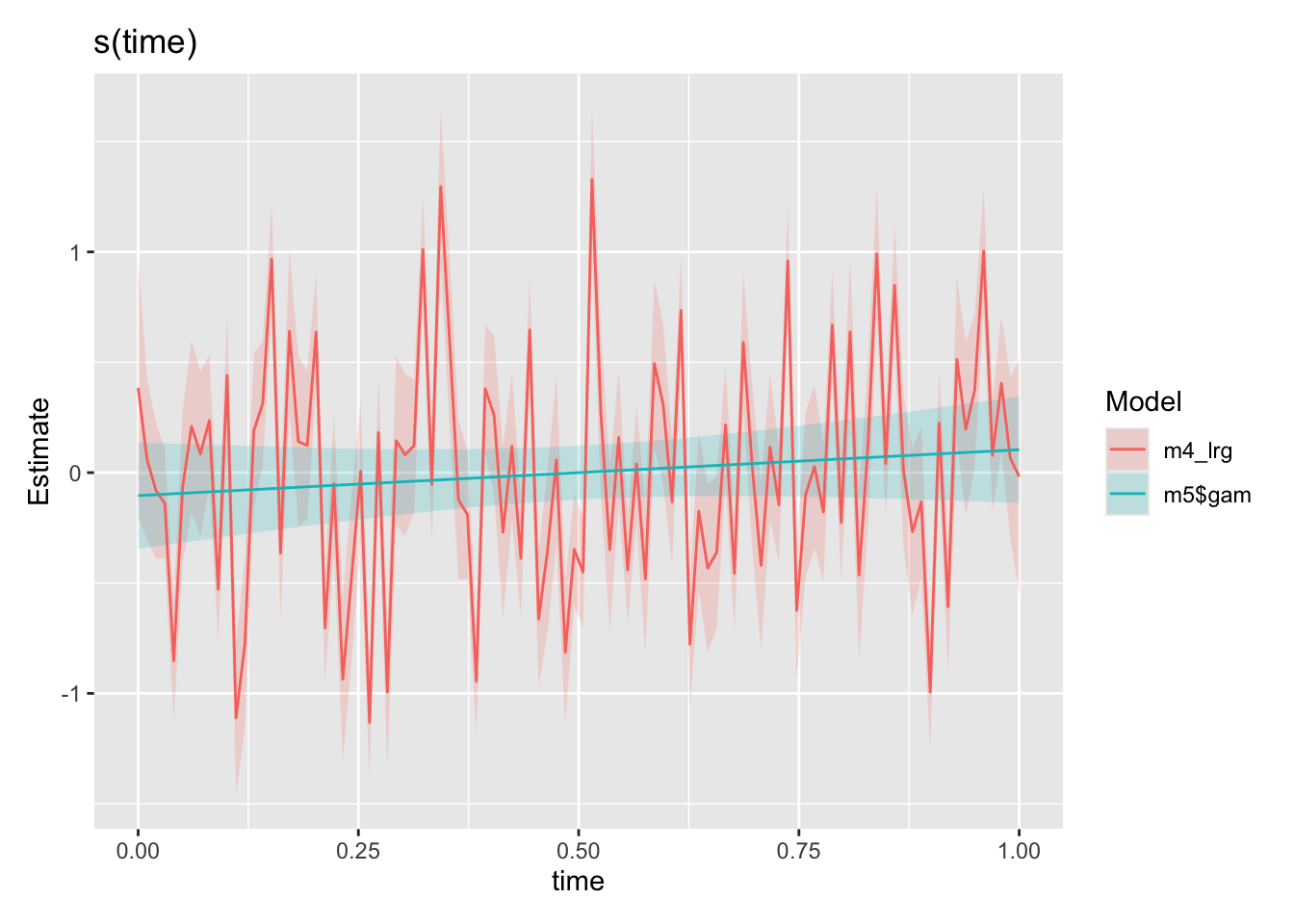

draw(compare_smooths(

m4_lrg, m5$gam, select = "s(time)"

))

Hey! Comparing our time smooths from our m4_lrg model above to our new m5 model (the same model just with AR1 residuals structure specified in gamm, also see “A small note” section), our time smooth is now looking much better. The model is no longer trying to fit the autocorrelation patterns using s(time) as expected. Lets go ahead and look at the smooths from our final model against the functions we used to create the synthetic data.

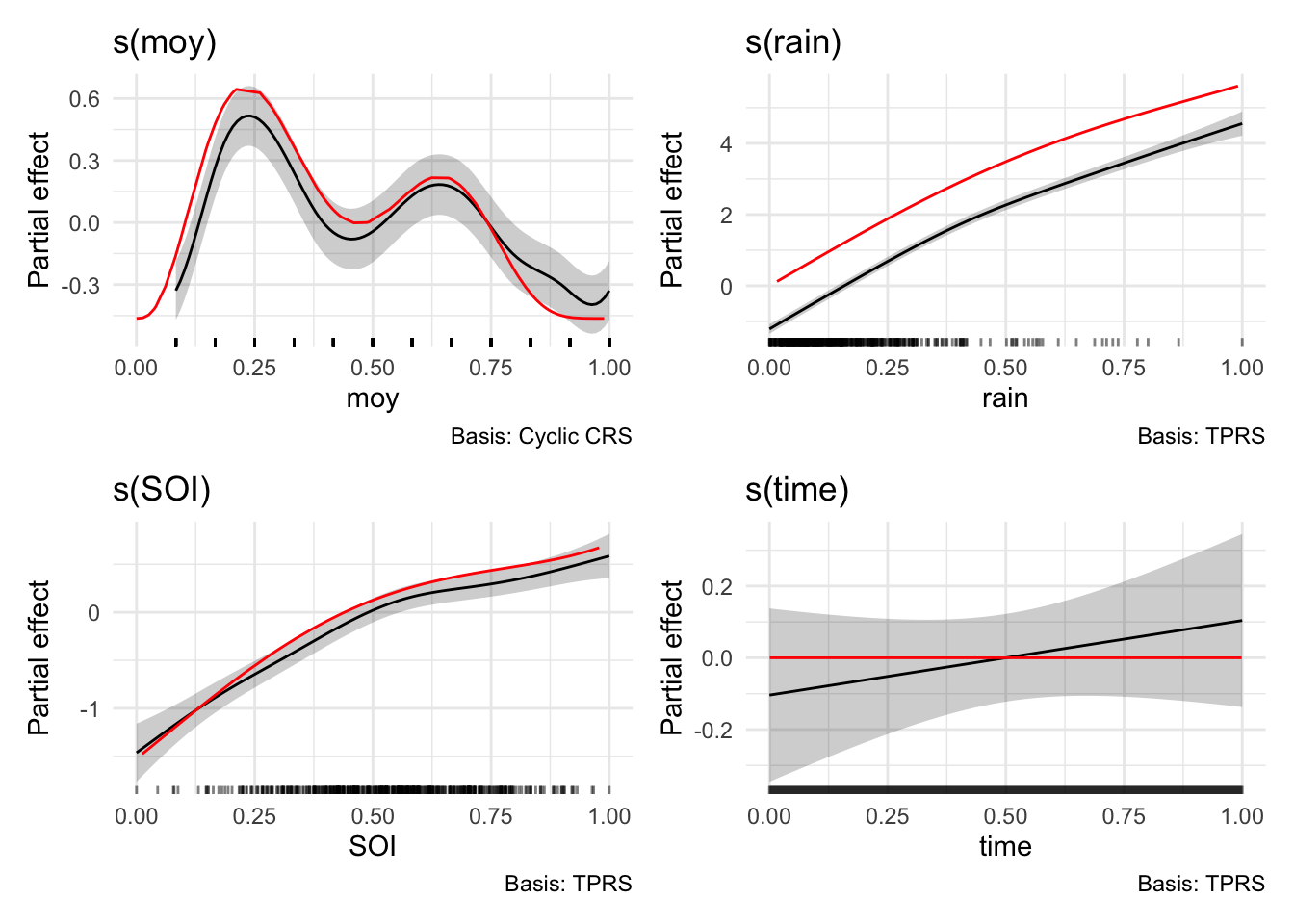

# get the "true" relationships

gt_data <- generate_gtdata()

# compare to the smooths from m5

wrap_plots(

draw(m5, select="s(moy)") +

geom_line(data=gt_data, aes(x = moy, y = f_moy), color="red") +

theme_minimal(),

draw(m5, select="s(rain)") +

geom_line(data=gt_data, aes(x = rain, y = f_rain), color="red") +

theme_minimal(),

draw(m5, select="s(SOI)") +

geom_line(data=gt_data, aes(x = SOI, y = f_SOI), color="red") +

theme_minimal(),

draw(m5, select="s(time)") +

geom_line(data=gt_data, aes(x = time, y = f_time), color="red") +

theme_minimal(),

ncol = 2

)

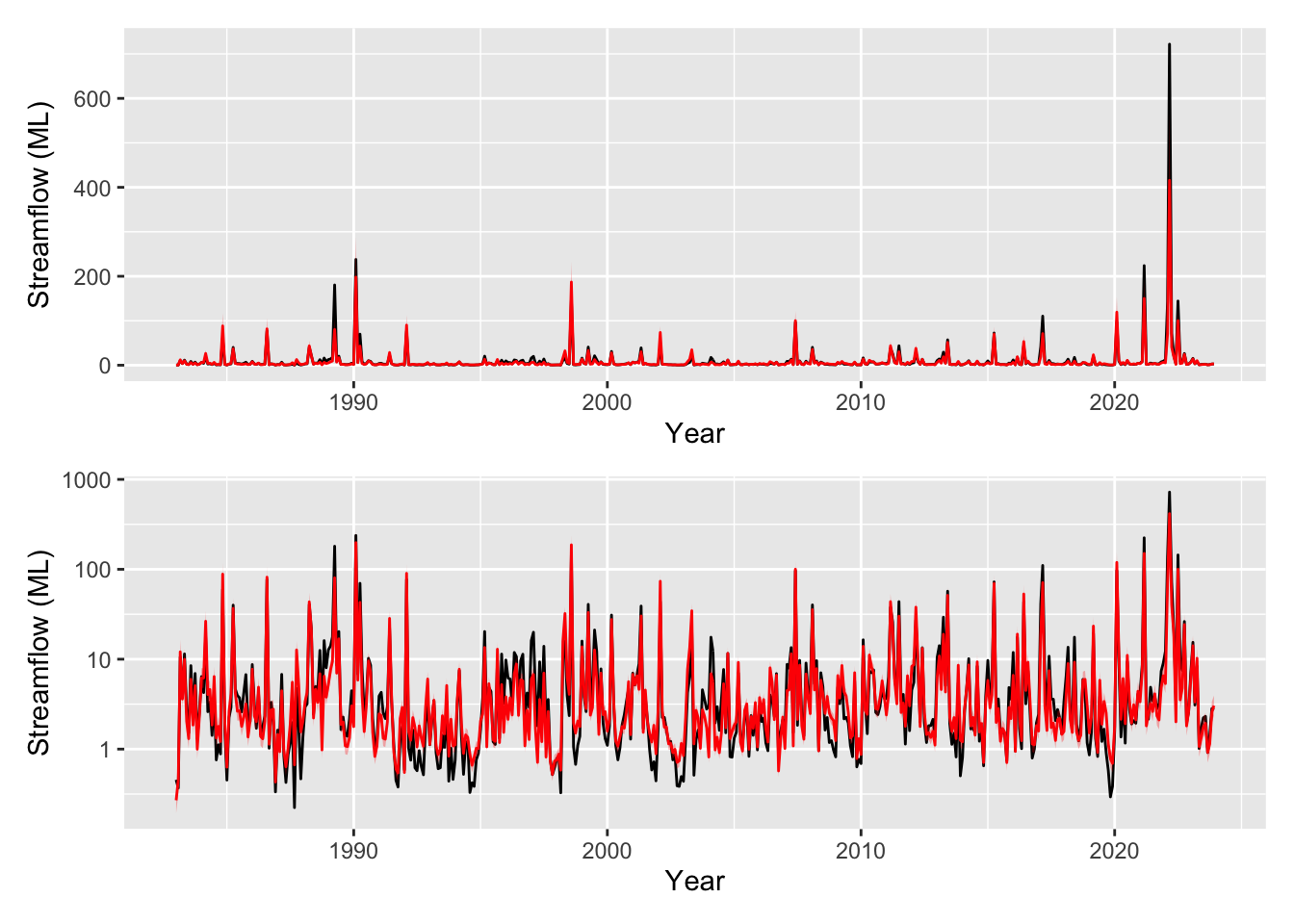

pred_plot <- plot_model_prediction(m5$gam, streamflow_data, exp_bool = T)

wrap_plots(

pred_plot,

pred_plot + scale_y_log10(),

ncol = 1

)

Looking excellent thanks to the wonders of synthetic data! Note that there was actually no underlying trend in the streamflow over time and our model now correctly picks that. The model still indicates that an average estimate is slightly increasing (take note of the models above to see how this could still be a valid explanation for the data) but it appropriately shows that it is in no way confident (p=0.329 so it is not identified as a significant smooth).

This is a good reminder, in light of all the model fits above, there are so many environmental processes working at different time scales that influence the signals we measure. We have to be really careful about what we are modelling, how we are modelling it, and what could be constituting any underlying trends that we try to measure in a lumped way (e.g., using a smooth on time). GAMs are a really neat tool, and are highly interpretable. But of course as with any model, it only answers the questions posed to it.

7 Further reading

- Nicholas Clark’s blog including this post on interpreting GAMs.

- Gavin Simpson’s blog including: smoothing with autoregressive residuals, decomposing timeseries with seasonal trends, and identifying periods with significant trends

mgcv’s very comprehensive documentation?mgcv- This excellent article mostly focused on hierarchical methods but also with very comprehensive descriptions of

?mgcv: Pedersen EJ, Miller DL, Simpson GL, Ross N. 2019. Hierarchical generalized additive models in ecology: an introduction with mgcv. PeerJ 7:e6876 https://doi.org/10.7717/peerj.6876

8 A small note

If you got this far, well done. As a reward: some nitpicking. gam and gamm fit the model differently (also note from ?gamm help: gamm is not as numerically stable as gam: an lme call will occasionally fail). Again the purpose of this post was just to demonstrate some of the issues using synthetic data, but I feel compelled to point out that m4_lrg fit with gam is not quite equivalent to m5 fit with gamm. Additionally, if you fit a gamm model with only k=30 knots for time, you will get a different (reduced complexity) fit than with gam. All the issues and solutions raised above still stand, and the point was to demonstrate that taking into account autocorrelation is important. But to hammer things home, we look at the k=100 case both with and without AR1 residuals with gamm and compare the time smooths.

You can see the gamm model with AR1 residuals holds up even in the face of a very flexible time smooth. The reason to do things correctly is not that you wont get the right answers sometimes, but rather that statistical beasts are always lurking in the shadows waiting to spoil your nice analysis.

# To drive the point home here are a huge number of knots

m4_lrg <- gamm(

log_flow ~ s(time, bs="tp", k=100) + s(moy, bs="cc") + s(rain) + s(SOI),

data = streamflow_data,

method = "REML",

family = gaussian(link="identity")

)

# fit again a model with a large number of knots for time, but now with AR1 residuals

m5 <- gamm(

log_flow ~ s(time, bs="tp", k=100) + s(moy, bs="cc") + s(rain) + s(SOI),

data = streamflow_data,

correlation = corAR1(), # our correlation structure

method = "REML",

family = gaussian(link="identity")

)

draw(compare_smooths(

m4_lrg, m5, select = "s(time)"

))